NLP with Python: Text Clustering

This article is Part 3 of a 5-part series on Natural Language Processing with Python.

Introduction

Clustering is the process of grouping similar items together. Each group, also called a cluster, contains items that are similar to each other. Clustering algorithms are unsupervised learning algorithms, i.e. they do not need a labelled dataset. There are many clustering algorithms, including KMeans, DBSCAN, spectral clustering and hierarchical clustering, and each has its own advantages and disadvantages.

The choice of algorithm depends mainly on whether you already know how many clusters to create. Some algorithms, such as KMeans, need you to specify the number of clusters, whereas DBSCAN does not. Another consideration is whether the trained model must be able to predict clusters for an unseen dataset. KMeans can predict clusters for new data, whereas DBSCAN cannot.
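Here is a small scikit-learn sketch that makes the difference concrete. It runs on synthetic blobs, and the data and the eps/min_samples values are arbitrary choices for illustration. KMeans is told how many clusters to find and can label new points. DBSCAN infers the number of clusters from its density parameters and, in scikit-learn, has no predict method at all.

```python
import numpy as np
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_blobs

# Toy 2-D data with three groups (synthetic, for illustration only)
X, _ = make_blobs(n_samples=300, centers=3, cluster_std=0.5, random_state=0)

# KMeans: the number of clusters must be given up front
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

# DBSCAN: no n_clusters; the cluster count follows from eps and min_samples
dbscan = DBSCAN(eps=0.5, min_samples=5).fit(X)
n_dbscan_clusters = len(set(dbscan.labels_) - {-1})  # label -1 marks noise points

# KMeans can assign unseen points to the clusters it learned ...
new_points = np.array([[0.0, 0.0], [5.0, 5.0]])
print(kmeans.predict(new_points))

# ... whereas DBSCAN only labels the data it was fitted on
print(hasattr(kmeans, "predict"), hasattr(dbscan, "predict"))  # True False
print("DBSCAN found", n_dbscan_clusters, "clusters")
```

With DBSCAN, the usual workaround for new data is to refit on the combined dataset, for example with fit_predict.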

I’ve released a new hassle-free NLP library called jange. It is based on spaCy and scikit-learn and provides a very easy API for common NLP tasks. Here is a quick example of clustering documents.

```python
from jange import ops, stream, vis

ds = stream.from_csv(
    "https://raw.githubusercontent.com/jangedoo/jange/master/dataset/bbc.csv",
    columns="news",
    context_column="type",
)

# Extract clusters
result_collector = {}
clusters_ds = ds.apply(
    ops.text.clean.pos_filter("NOUN", keep_matching_tokens=True),
    ops.text.encode.tfidf(max_features=5000, name="tfidf"),
    ops.cluster.minibatch_kmeans(n_clusters=5),
    result_collector=result_collector,
)

# Get features extracted by tfidf and reduce the dimensions
features_ds = result_collector[clusters_ds.applied_ops.find_by_name("tfidf")]
reduced_features = features_ds.apply(ops.dim.pca(n_dim=2))

# Visualization
vis.cluster.visualize(reduced_features, clusters_ds)
```

If you are interested, visit the GitHub page to install it and get started.

In this post we’ll cluster news articles into different categories. First, download the dataset from http://mlg.ucd.ie/files/datasets/bbc-fulltext.zip and extract it. The dataset consists of 2225 documents in 5 categories: business, entertainment, politics, sport, and tech. For the most part we’ll ignore the labels. However, we’ll use them when evaluating the trained model, since many evaluation metrics need the “true” labels.

Let’s import the required libraries.

```python
import pandas as pd
import numpy as np
from sklearn.cluster import MiniBatchKMeans
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.decomposition import PCA
import matplotlib.pyplot as plt
```

Now we can read the data. If you look at the extracted zip, you’ll see 5 folders, each containing articles. Each folder represents a category, and the articles in that folder belong to that category. As mentioned before, we aren’t really concerned with the categories, but they are there. To load data in this format, sklearn has a handy utility function called load_files, which loads text files and uses the sub-folder names as categories.

```python
from sklearn.datasets import load_files

# for reproducibility
random_state = 0

DATA_DIR = "./bbc/"
data = load_files(DATA_DIR, encoding="utf-8", decode_error="replace", random_state=random_state)
df = pd.DataFrame(list(zip(data['data'], data['target'])), columns=['text', 'label'])
df.head()
```

| | text | label |
|---|---|---|
| 0 | Tate & Lyle boss bags top award\n\nTate & Lyle... | 0 |
| 1 | Halo 2 sells five million copies\n\nMicrosoft ... | 4 |
| 2 | MSPs hear renewed climate warning\n\nClimate c... | 2 |
| 3 | Pavey focuses on indoor success\n\nJo Pavey wi... | 3 |
| 4 | Tories reject rethink on axed MP\n\nSacked MP ... | 2 |
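To see how load_files maps folders to labels, here is a sketch that builds a tiny folder tree in a temporary directory and loads it the same way as the BBC data. The category names and texts are invented. The name demo is used so the real data variable is not overwritten.

```python
import os
import tempfile

from sklearn.datasets import load_files

# Build a tiny folder tree that mimics the BBC layout: one sub-folder per category.
# The folder names and texts are made up for illustration.
root = tempfile.mkdtemp()
samples = {
    "business": ["Shares rose today.", "Profits fell."],
    "sport": ["The team won the final."],
}
for category, texts in samples.items():
    os.makedirs(os.path.join(root, category))
    for i, text in enumerate(texts):
        with open(os.path.join(root, category, f"{i}.txt"), "w", encoding="utf-8") as f:
            f.write(text)

demo = load_files(root, encoding="utf-8", random_state=0)

# Sub-folder names (sorted) become target_names; target holds an index into them
print(demo.target_names)  # ['business', 'sport']
for text, target in zip(demo.data, demo.target):
    print(demo.target_names[target], "->", text)
```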

Feature extraction

For each article in our dataset, we’ll compute TF-IDF values. If you are not familiar with TF-IDF or feature extraction, you can read about them in the second part of this tutorial series called “Text Feature Extraction”.

```python
vec = TfidfVectorizer(stop_words="english")
vec.fit(df.text.values)
features = vec.transform(df.text.values)
```

Now that we have our feature matrix, we can feed it to the model for training.

Model training

Let’s create an instance of MiniBatchKMeans, a faster variant of KMeans that updates the centroids using small random batches of the data. I’ll choose 5 as the number of clusters, since each article in the dataset belongs to one of 5 categories. Of course, if you don’t have labels, you won’t know exactly how many clusters to create. In that case, run multiple experiments and let domain knowledge guide you to the number that best fits your needs.
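One common way to run such experiments, though not one used in this article, is to sweep over candidate cluster counts and compare the silhouette score of each. Here is a minimal sketch; make_blobs stands in for a real feature matrix, and the range 2 to 7 is arbitrary.

```python
from sklearn.cluster import MiniBatchKMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

# Synthetic data standing in for a real feature matrix (an assumption for the demo)
X_demo, _ = make_blobs(n_samples=500, centers=4, cluster_std=0.6, random_state=0)

# Fit one model per candidate k and record its silhouette score
# (ranges from -1 to 1; higher means tighter, better-separated clusters)
scores = {}
for k in range(2, 8):
    labels = MiniBatchKMeans(n_clusters=k, n_init=3, random_state=0).fit_predict(X_demo)
    scores[k] = silhouette_score(X_demo, labels)

best_k = max(scores, key=scores.get)
print(scores)
print("best k by silhouette:", best_k)
```

The silhouette peak is only a heuristic. It is worth checking against what the clusters actually contain before settling on a number.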

Creating a model is pretty simple.

```python
cls = MiniBatchKMeans(n_clusters=5, random_state=random_state)
cls.fit(features)
```

That is all it takes to create and train a clustering model. To predict the clusters, we can call the model’s predict function. Note that not all clustering algorithms can predict on new datasets. For those, you can get the cluster labels of the data you passed to fit from the model’s labels_ attribute.

```python
# predict cluster labels for a new dataset
cls.predict(features)

# to get cluster labels for the dataset used while
# training the model (useful for models that do not
# support prediction on a new dataset)
cls.labels_
```
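When predicting on genuinely new documents, the key point is to reuse the already fitted vectorizer, so that new texts are mapped onto the same vocabulary columns the model was trained on. Here is a small sketch with a made-up corpus; train_docs, new_docs and the toy_ names are illustrative only.

```python
from sklearn.cluster import MiniBatchKMeans
from sklearn.feature_extraction.text import TfidfVectorizer

# A tiny made-up training corpus (illustration only)
train_docs = [
    "shares and profits rose at the bank",
    "the bank cut interest rates and shares fell",
    "the striker scored a goal in the final",
    "the team won the final after a late goal",
]
new_docs = ["profits at the bank fell", "a goal won the final"]

toy_vec = TfidfVectorizer(stop_words="english")
toy_cls = MiniBatchKMeans(n_clusters=2, n_init=3, random_state=0)
toy_cls.fit(toy_vec.fit_transform(train_docs))

# Unseen text goes through the *same* fitted vectorizer (transform, not fit)
new_labels = toy_cls.predict(toy_vec.transform(new_docs))
print(new_labels)

# labels_ only covers the training documents
print(toy_cls.labels_)
```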

Visualization

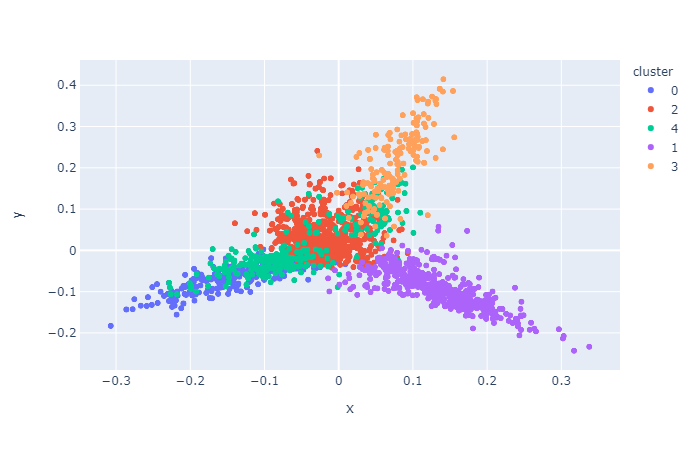

To visualize the clusters, we’ll plot the features in 2D space. The features obtained from TfidfVectorizer have a very large number of dimensions (> 10,000), so we need to reduce the dimensionality before plotting. For this, we’ll use PCA to transform the high-dimensional features into 2 dimensions.

```python
# reduce the features to 2D
pca = PCA(n_components=2, random_state=random_state)
reduced_features = pca.fit_transform(features.toarray())

# reduce the cluster centers to 2D
reduced_cluster_centers = pca.transform(cls.cluster_centers_)
```
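Calling toarray() materializes a dense n_documents × n_terms matrix, which can use a lot of memory on larger corpora. An alternative, not the one used here, is scikit-learn’s TruncatedSVD, which accepts sparse input directly. Unlike PCA, it does not center the data first (applied to TF-IDF this is known as latent semantic analysis), so the resulting plot will differ somewhat. Here is a sketch with a random sparse matrix standing in for the TF-IDF features; its shape is arbitrary.

```python
from scipy.sparse import random as sparse_random
from sklearn.decomposition import TruncatedSVD

# A random sparse matrix standing in for the TF-IDF matrix (shape chosen arbitrarily)
X_sparse = sparse_random(200, 5000, density=0.01, format="csr", random_state=0)

# TruncatedSVD works on the sparse matrix as-is, so no .toarray() is needed
svd = TruncatedSVD(n_components=2, random_state=0)
reduced = svd.fit_transform(X_sparse)
print(reduced.shape)  # (200, 2)

# Dense cluster centers can be projected with the same fitted transformer,
# e.g. svd.transform(cls.cluster_centers_) in the article's setting
```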

Now that we have reduced our features and cluster centers to 2D, we can plot those points using a scatter plot. The first dimension is used for the X values and the second for the Y values. We also color each item by its predicted cluster label, so that items in the same cluster share the same color. This is done by passing the labels to the c parameter of the scatter function. Again, you can use c=cls.labels_ instead if the clustering algorithm does not support a predict function, or if you want to visualize the training data itself.

```python
plt.scatter(reduced_features[:,0], reduced_features[:,1], c=cls.predict(features))
plt.scatter(reduced_cluster_centers[:, 0], reduced_cluster_centers[:,1], marker='x', s=150, c='b')
```

From the plot we can see that, apart from the rightmost cluster, the others are scattered all around and overlap each other.

Evaluation

Evaluating unsupervised learning algorithms is somewhat difficult and often requires human judgement, but there are some metrics you can use. They come in two kinds, depending on whether or not you have labels. For most clustering problems you probably won’t have labels; if you did, you would likely do classification instead. Still, here are a couple of metrics I want to show you. You can read about the others on your own.

Evaluation with a labelled dataset

If you have a labelled dataset, you can use a few metrics that give you an idea of how good your clustering model is. The one I’m going to show here is homogeneity_score, but you can find and read about many other metrics in the sklearn.metrics module. According to the documentation, the score ranges from 0 to 1, where 1 stands for perfectly homogeneous labeling.
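A few hand-made label vectors show what homogeneity does and doesn’t measure; the labels are invented for illustration. A clustering is homogeneous when each cluster contains members of only one class. The score ignores how the cluster ids are numbered.

```python
from sklearn.metrics import completeness_score, homogeneity_score

true_labels = [0, 0, 1, 1]

# Every cluster holds a single class -> perfectly homogeneous (1.0),
# even though each class is split across two clusters
h_split = homogeneity_score(true_labels, [0, 1, 2, 3])

# Cluster ids are arbitrary: a relabelled but identical grouping also scores 1.0
h_swapped = homogeneity_score(true_labels, [1, 1, 0, 0])

# One cluster holding both classes -> homogeneity 0.0
h_merged = homogeneity_score(true_labels, [0, 0, 0, 0])

print(h_split, h_swapped, h_merged)

# Completeness penalises the split case instead, which is why the two are
# often reported together (or combined as v_measure_score)
print(completeness_score(true_labels, [0, 1, 2, 3]))
```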

```python
from sklearn.metrics import homogeneity_score
homogeneity_score(df.label, cls.predict(features))
```

Cluster analysis of a text corpus

Sometimes you need to analyze a large amount of text data without knowing what the texts are about. In that case, you can try to split the texts into clusters and generate a description of each cluster. This gives a first approximation of what the texts contain.

Test data

The test data was a fragment of the RIA news dataset, and only the news headlines were used in processing.

Computing embeddings

The texts were vectorized with the LaBSE model by @cointegrated. The model is available on Hugging Face.

```python
import numpy as np
import torch
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained("cointegrated/LaBSE-en-ru")
model = AutoModel.from_pretrained("cointegrated/LaBSE-en-ru")

# example input; in the article these are the news headlines
sentences = ['мама мыла раму']

embeddings_list = []
for s in sentences:
    encoded_input = tokenizer(s, padding=True, truncation=True, max_length=64, return_tensors='pt')
    with torch.no_grad():
        model_output = model(**encoded_input)
    # the pooled output is used as the sentence embedding
    embedding = model_output.pooler_output
    embeddings_list.append(embedding[0].numpy())

embeddings = np.asarray(embeddings_list)
```

Clustering
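The code above uses the raw pooler_output as the embedding. An optional extra step, not part of the original pipeline, is to L2-normalize the embeddings before clustering. K-means works with Euclidean distance, while sentence embeddings are usually compared by cosine similarity, and for unit-length vectors the two are directly related. Here is a sketch with random vectors standing in for real embeddings; the dimension is arbitrary.

```python
import numpy as np

# Random vectors standing in for sentence embeddings (synthetic values)
rng = np.random.default_rng(0)
emb = rng.normal(size=(5, 768))

# L2-normalise each row so every embedding has unit length
emb_unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)

# For unit vectors, squared Euclidean distance = 2 - 2 * cosine similarity,
# so distances between normalised embeddings are a monotone function of cosine
a, b = emb_unit[0], emb_unit[1]
sq_dist = np.sum((a - b) ** 2)
cos_sim = a @ b
print(np.isclose(sq_dist, 2 - 2 * cos_sim))  # True
```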

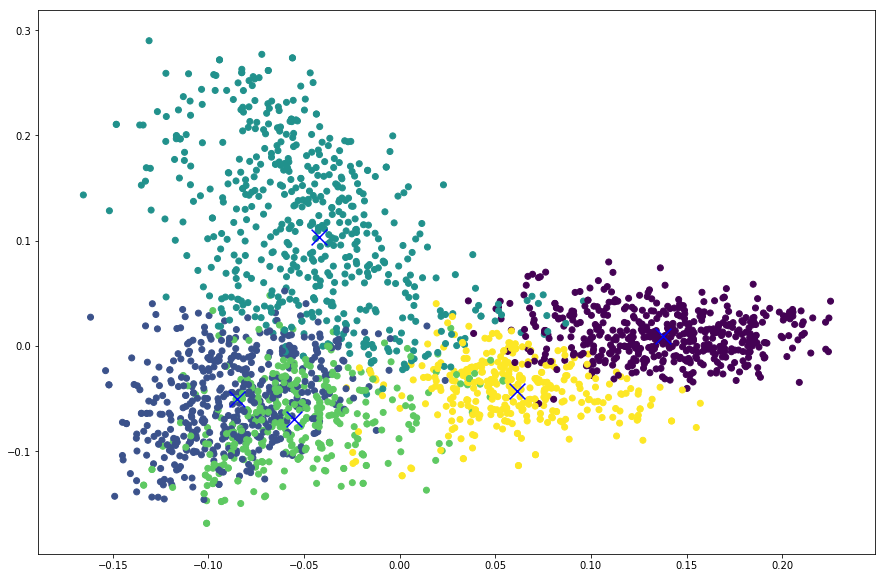

The k-means algorithm was chosen for clustering. It was chosen for illustration; in practice you often have to experiment with the data and the algorithms to get sensible clusters.
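The elbow method plots k-means inertia against the number of clusters and picks the point where the curve bends. The author’s function below locates that bend by fitting two straight lines to the curve. The sketch here shows the same two-segment idea in compact form. It uses a hypothetical helper, two_line_elbow, and a synthetic piecewise-linear curve whose bend is known to be at k = 8; the numbers are made up.

```python
import numpy as np


def two_line_elbow(ks, inertias):
    """Hypothetical helper: return the k at which splitting the curve into two
    straight-line fits gives the smallest combined mean squared error."""
    ks = np.asarray(ks, dtype=float)
    inertias = np.asarray(inertias, dtype=float)
    best_k, best_err = None, np.inf
    # Each segment needs at least two points; the split point belongs to both
    for i in range(1, len(ks) - 1):
        err = 0.0
        for x, y in ((ks[: i + 1], inertias[: i + 1]), (ks[i:], inertias[i:])):
            slope, intercept = np.polyfit(x, y, 1)
            err += np.mean((y - (slope * x + intercept)) ** 2)
        if err < best_err:
            best_k, best_err = int(ks[i]), err
    return best_k


# Synthetic inertia curve: falls steeply until k = 8, then flattens out
ks = list(range(2, 31))
inertias = [100 - 10 * (k - 2) if k <= 8 else 40 - (k - 8) for k in ks]
print(two_line_elbow(ks, inertias))  # 8
```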

To find the optimal number of clusters, we’ll use a function that implements the "elbow method":

Function for finding the optimal number of clusters:

```python
import numpy as np
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error


def determine_k(embeddings):
    k_min = 10
    # candidate numbers of clusters: 2 .. 109
    clusters = [x for x in range(2, k_min * 11)]
    metrics = []
    for i in clusters:
        # inertia = sum of squared distances of samples to their closest center
        metrics.append((KMeans(n_clusters=i).fit(embeddings)).inertia_)
    k = elbow(k_min, clusters, metrics)
    return k


def elbow(k_min, clusters, metrics):
    # Split the inertia curve at index i, fit a straight line to each part,
    # and keep the split with the smallest combined fitting error
    score = []
    for i in range(k_min, clusters[-3]):
        y1 = np.array(metrics)[:i + 1]
        y2 = np.array(metrics)[i:]
        df1 = pd.DataFrame({'x': clusters[:i + 1], 'y': y1})
        df2 = pd.DataFrame({'x': clusters[i:], 'y': y2})
        reg1 = LinearRegression().fit(np.asarray(df1.x).reshape(-1, 1), df1.y)
        reg2 = LinearRegression().fit(np.asarray(df2.x).reshape(-1, 1), df2.y)
        y1_pred = reg1.predict(np.asarray(df1.x).reshape(-1, 1))
        y2_pred = reg2.predict(np.asarray(df2.x).reshape(-1, 1))
        score.append(mean_squared_error(y1, y1_pred) + mean_squared_error(y2, y2_pred))
    # score[j] belongs to split index i = j + k_min; map it back to a cluster count
    return clusters[np.argmin(score) + k_min]


k_opt = determine_k(embeddings)
```

Extracting information about the resulting clusters

After clustering, for each cluster we take a few texts that lie as close as possible to the cluster center.
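Here is a vectorized sketch of this idea, offered as an alternative to the author’s function that follows rather than a replacement. KMeans.transform returns the distance from every point to every cluster center, so the texts nearest each center can be read off directly. The points and texts are made up; in the real pipeline they would be the headline embeddings and headlines.

```python
import numpy as np
from sklearn.cluster import KMeans

# Made-up 2-D "embeddings" and texts: two obvious groups of three
texts = ["a-left", "a-mid", "a-right", "b-left", "b-mid", "b-right"]
points = np.array([[0, 0], [1, 0], [2, 0], [10, 10], [11, 10], [12, 10]], dtype=float)

km = KMeans(n_clusters=2, n_init=10, random_state=42).fit(points)

# transform() returns each point's distance to every cluster center
dist_to_centers = km.transform(points)

top_n = 3
top_texts_per_cluster = []
for c in range(km.n_clusters):
    members = np.where(km.labels_ == c)[0]
    # distance of each member to *its own* cluster center, smallest first
    order = members[np.argsort(dist_to_centers[members, c], kind="stable")]
    top_texts_per_cluster.append([texts[j] for j in order[:top_n]])

print(top_texts_per_cluster)
```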

Function for finding the texts closest to the cluster center:

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.metrics.pairwise import euclidean_distances

kmeans = KMeans(n_clusters=k_opt, random_state=42).fit(embeddings)
kmeans_labels = kmeans.labels_

data = pd.DataFrame()
data['text'] = sentences
data['label'] = kmeans_labels
data['embedding'] = list(embeddings)

kmeans_centers = kmeans.cluster_centers_
top_texts_list = []
for i in range(0, k_opt):
    cluster = data[data['label'] == i]
    cluster_embeddings = list(cluster['embedding'])
    texts = list(cluster['text'])
    # distance from each text in cluster i to the center of cluster i
    distances = [euclidean_distances(kmeans_centers[i].reshape(1, -1), e.reshape(1, -1))[0][0]
                 for e in cluster_embeddings]
    scores = list(zip(texts, distances))
    # keep the three texts closest to the center
    top_3 = sorted(scores, key=lambda x: x[1])[:3]
    top_texts = list(zip(*top_3))[0]
    top_texts_list.append(top_texts)
```

Summarizing the central texts

The resulting central texts can then be merged into an overall description of the cluster using a text summarization model. I used the ruT5 model by @cointegrated for this. The model is available on Hugging Face.

```python
import torch
from transformers import T5ForConditionalGeneration, T5Tokenizer

MODEL_NAME = 'cointegrated/rut5-base-absum'
model = T5ForConditionalGeneration.from_pretrained(MODEL_NAME)
tokenizer = T5Tokenizer.from_pretrained(MODEL_NAME)


def summarize(
    text, n_words=None, compression=None,
    max_length=1000, num_beams=3, do_sample=False, repetition_penalty=10.0,
    **kwargs
):
    """
    Summarize the text
    The following parameters are mutually exclusive:
    - n_words (int) is an approximate number of words to generate.
    - compression (float) is an approximate length ratio of summary and original text.
    """
    if n_words:
        text = '[{}] '.format(n_words) + text
    elif compression:
        text = '[{}] '.format(compression) + text
    # x = tokenizer(text, return_tensors='pt', padding=True).to(model.device)
    x = tokenizer(text, return_tensors='pt', padding=True)
    with torch.inference_mode():
        out = model.generate(
            **x,
            max_length=max_length, num_beams=num_beams,
            do_sample=do_sample, repetition_penalty=repetition_penalty,
            **kwargs
        )
    return tokenizer.decode(out[0], skip_special_tokens=True)


# one summary per cluster, built from its central texts
summ_list = []
for top in top_texts_list:
    summ_list.append(summarize(' '.join(list(top))))
```

Conclusion

The approach presented here does not work in every domain. News is a pleasant exception: texts of this kind separate quite well even with ordinary methods. Something like Twitter, on the other hand, takes more effort. The abundance of grammatical errors and slang, and the lack of punctuation, can become a nightmare for any analyst. Comments, corrections, and additions are welcome!

Links

You can play with the notebook in Colab; the link is in the GitHub repository.