- Reading Huge File in Python

- 8 Answers

- Python: How to read huge text file into memory

- How to read large text files in Python?

- Problem with readlines() method to read large text files

- Read large text files in Python using iterate

- How to Read Large Text Files in Python

- Reading Large Text Files in Python

- What if the Large File doesn’t have lines?

Reading Huge File in Python

I have a 384MB text file with 50 million lines. Each line contains 2 space-separated integers: a key and a value. The file is sorted by key. I need an efficient way of looking up the values of a list of about 200 keys in Python. My current approach is included below. It takes 30 seconds. There must be more efficient Python foo to get this down to a reasonable efficiency of a couple of seconds at most.

    # list contains a sorted list of the keys we need to look up
    # there is a sentinel at the end of list to simplify the code
    # we use pointer to iterate through the list of keys
    for line in fin:
        line = [int(x) for x in line.split()]
        while line[0] == list[pointer].key:
            list[pointer].value = line[1]
            pointer += 1
        while line[0] > list[pointer].key:
            pointer += 1
        if pointer >= len(list) - 1:
            break  # end of list; -1 is due to sentinel

Binary search with seek() over fixed-width entries:

    entries = 24935502  # number of entries
    width = 18          # fixed width of an entry in the file padded with spaces
                        # at the end of each line
    for i, search in enumerate(list):  # list contains the list of search keys
        left, right = 0, entries - 1
        key = None
        while key != search and left <= right:
            mid = (left + right) // 2
            fin.seek(mid * width)
            key, value = map(int, fin.readline().split())
            if search > key:
                left = mid + 1
            else:
                right = mid - 1
        if key != search:
            value = None  # for when search key is not found
        search.result = value  # store the result of the search

I’d be interested to see your final solution, as it seems you put it together from answers that didn’t provide code.

8 Answers

If you only need 200 of 50 million lines, then reading all of it into memory is a waste. I would sort the list of search keys and then apply binary search to the file using seek() or something similar. This way you would not read the entire file into memory, which I think should speed things up.

Slight optimization of S.Lott's answer:

    from collections import defaultdict

    keyValues = defaultdict(list)
    targetKeys = # some list of keys as strings
    for line in fin:
        key, value = line.split()
        if key in targetKeys:
            keyValues[key].append(value)

Since we’re using a dictionary rather than a list, the keys don’t have to be numbers. This saves the map() operation and a string-to-integer conversion for each line. If you want the keys to be numbers, do the conversion at the end, when you only have to do it once for each key, rather than for each of 50 million lines.

It’s not clear what «list[pointer]» is all about. Consider this, however.

    from collections import defaultdict

    keyValues = defaultdict(list)
    targetKeys = # some list of keys
    for line in fin:
        key, value = map(int, line.split())
        if key in targetKeys:
            keyValues[key].append(value)

This is slower than the method I included in the question. 🙁 PS: I added a couple of comments to my code snippet to explain it a bit better.

I would use memory-mapping: http://docs.python.org/library/mmap.html.

This way you can use the file as if it’s stored in memory, but the OS decides which pages should actually be read from the file.
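A minimal sketch of how memory-mapping might be combined with binary search. It assumes the file holds one "key value" pair per line, sorted by integer key, with unique keys and no blank lines. The names `lookup`, `lookup_many` and `build_sample` are hypothetical and do not come from the answer.

```python
import mmap
import os
import tempfile


def lookup(mm, target):
    """Binary-search a memory-mapped file of sorted 'key value' lines.

    Returns the value for `target` as an int, or None if the key is absent.
    """
    lo, hi = 0, len(mm)  # search window in byte offsets: [lo, hi)
    while lo < hi:
        mid = (lo + hi) // 2
        # Find the start and end of the line that contains byte `mid`.
        start = mm.rfind(b"\n", 0, mid) + 1
        end = mm.find(b"\n", start)
        if end == -1:
            end = len(mm)  # last line has no trailing newline
        key, value = mm[start:end].split()
        key = int(key)
        if key == target:
            return int(value)
        if key < target:
            lo = end + 1   # continue after this line
        else:
            hi = start     # continue before this line
    return None


def lookup_many(path, targets):
    """Look up several keys; the OS pages in only the parts of the file touched."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            return {t: lookup(mm, t) for t in targets}


def build_sample(path, n=1000):
    """Write n sorted lines 'k k*k' for k = 0, 3, 6, ... (illustrative data only)."""
    with open(path, "wb") as f:
        for k in range(0, 3 * n, 3):
            f.write(b"%d %d\n" % (k, k * k))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = os.path.join(tmp, "sorted.txt")
        build_sample(sample)
        print(lookup_many(sample, [3, 4, 2997]))
```

Each lookup touches only a handful of pages, so for about 200 keys most of the file is never read from disk.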

Here is a recursive binary search on the text file:

    import os, stat

    class IntegerKeyTextFile(object):
        def __init__(self, filename):
            self.filename = filename
            # binary mode, so seek() and tell() work with plain byte offsets
            self.f = open(self.filename, 'rb')
            self.getStatinfo()

        def getStatinfo(self):
            self.statinfo = os.stat(self.filename)
            self.size = self.statinfo[stat.ST_SIZE]

        def parse(self, line):
            key, value = line.split()
            k = int(key)
            v = int(value)
            return (k, v)

        def __getitem__(self, key):
            return self.findKey(key)

        def findKey(self, keyToFind, startpoint=0, endpoint=None):
            "Recursively search a text file"
            if endpoint is None:
                endpoint = self.size
            currentpoint = (startpoint + endpoint) // 2
            while True:
                self.f.seek(currentpoint)
                if currentpoint != 0:
                    # may not start at a line break! Discard.
                    baddata = self.f.readline()
                linestart = self.f.tell()
                keyatpoint = self.f.readline()
                if not keyatpoint:
                    # read returned empty - end of file
                    raise KeyError('key %d not found' % (keyToFind,))
                k, v = self.parse(keyatpoint)
                if k == keyToFind:
                    print('key found at ', linestart, ' with value ', v)
                    return v
                if endpoint - startpoint <= 1:
                    # the window cannot shrink any further, so stop recursing
                    raise KeyError('key %d not found' % (keyToFind,))
                if k > keyToFind:
                    return self.findKey(keyToFind, startpoint, currentpoint)
                else:
                    return self.findKey(keyToFind, currentpoint, endpoint)

A sample text file created in jEdit seems to work:

    >>> i = integertext.IntegerKeyTextFile('c:\\sampledata.txt')
    >>> i[1]
    key found at 0 with value 345
    345

It could definitely be improved by caching found keys and using the cache to determine future starting seek points.

Python: How to read huge text file into memory

So each line in the file consists of a tuple of two comma-separated integer values. I want to read in the whole file and sort it according to the second column. I know that I could do the sorting without reading the whole file into memory. But I thought that for a file of 500MB I should still be able to do it in memory, since I have 1GB available. However, when I try to read in the file, Python seems to allocate a lot more memory than the file needs on disk. So even with 1GB of RAM I’m not able to read the 500MB file into memory. My Python code for reading the file and printing some information about the memory consumption is:

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import sys

    infile = open("links.csv", "r")
    edges = []
    count = 0
    # count the total number of lines in the file
    for line in infile:
        count = count + 1
    total = count
    print("Total number of lines: ", total)

    infile.seek(0)
    count = 0
    for line in infile:
        edge = tuple(map(int, line.strip().split(",")))
        edges.append(edge)
        count = count + 1
        # for every million lines print memory consumption
        if count % 1000000 == 0:
            print("Position: ", edge)
            print("Read ", float(count) / float(total) * 100, "%.")
            mem = sys.getsizeof(edges)
            for edge in edges:
                mem = mem + sys.getsizeof(edge)
                for node in edge:
                    mem = mem + sys.getsizeof(node)
            print("Memory (Bytes): ", mem)

Output:

    Total number of lines: 30609720
    Position: (9745, 2994)
    Read 3.26693612356 %.
    Memory (Bytes): 64348736
    Position: (38857, 103574)
    Read 6.53387224712 %.
    Memory (Bytes): 128816320
    Position: (83609, 63498)
    Read 9.80080837067 %.
    Memory (Bytes): 192553000
    Position: (139692, 1078610)
    Read 13.0677444942 %.
    Memory (Bytes): 257873392
    Position: (205067, 153705)
    Read 16.3346806178 %.
    Memory (Bytes): 320107588
    Position: (283371, 253064)
    Read 19.6016167413 %.
    Memory (Bytes): 385448716
    Position: (354601, 377328)
    Read 22.8685528649 %.
    Memory (Bytes): 448629828
    Position: (441109, 3024112)
    Read 26.1354889885 %.
    Memory (Bytes): 512208580

Already after reading only 25% of the 500MB file, Python consumes 500MB. So it seems that storing the content of the file as a list of tuples of ints is not very memory efficient. Is there a better way to do it, so that I can read my 500MB file into my 1GB of memory?
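The excerpt here does not include an answer, so the following is only an illustration of where the memory goes, not the thread's solution. It counts the footprint of a list of tuples the same way the question does and compares it with holding the two columns in typed arrays from the standard library's `array` module. The function names and the sample data are made up for the example, and sorting by the second column would still need a separate step.

```python
import sys
from array import array


def list_of_tuples_bytes(pairs):
    """Approximate footprint of a list of 2-tuples of ints, counted as in the question."""
    total = sys.getsizeof(pairs)               # the list's own pointer table
    for pair in pairs:
        total += sys.getsizeof(pair)           # one tuple object per line
        total += sum(sys.getsizeof(n) for n in pair)  # one int object per value
    return total


def packed_bytes(pairs):
    """Footprint of the same values stored as two arrays of 64-bit signed integers."""
    first = array("q", (a for a, _ in pairs))
    second = array("q", (b for _, b in pairs))
    return sys.getsizeof(first) + sys.getsizeof(second)


if __name__ == "__main__":
    # Hypothetical data standing in for the lines of links.csv.
    pairs = [(i * 1000 + 7, i * 2000 + 11) for i in range(100_000)]
    print("list of tuples:", list_of_tuples_bytes(pairs), "bytes")
    print("typed arrays:  ", packed_bytes(pairs), "bytes")
```

Every tuple and every int is a separate Python object with its own header, whereas a typed array stores 8 bytes per value in one contiguous buffer.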

How to read large text files in Python?

In this article, we will look at how to read a large text file in Python quickly and with low memory usage.

To read large text files in Python, we can use the file object as an iterator and perform the required task on each line as it is produced. Because the iterator yields one line at a time and needs no additional data structure to hold the file's contents, memory consumption stays comparatively low. It also avoids the cost of appending every line to a list, so it is time-efficient as well. Files are iterable in Python, so iterating over the file object is the recommended approach.

Problem with readlines() method to read large text files

In Python, a whole file can be read with the readlines() method, which returns a list in which each item is one complete line of the file. This is convenient when the file is small. Because readlines() appends every line to a list and then returns the entire list, it becomes time-consuming when the file is extremely large, say several GB. The list also occupies a large amount of memory, which can exhaust the available memory and raise a MemoryError if the machine does not have enough.
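The difference can be made visible with the standard library's `tracemalloc` module. The sketch below is an illustration under assumptions: the helper names and the generated sample file are hypothetical, and the numbers it prints depend on the machine and Python version.

```python
import os
import tempfile
import tracemalloc


def peak_bytes(func, path):
    """Run func(path) and return (result, peak bytes allocated by Python meanwhile)."""
    tracemalloc.start()
    try:
        result = func(path)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def count_with_readlines(path):
    with open(path) as f:
        return len(f.readlines())  # builds a list holding every line


def count_with_iteration(path):
    with open(path) as f:
        return sum(1 for _ in f)   # keeps only the current line alive


def make_sample(path, lines=50_000):
    """Write a small stand-in for a large file: one 'i 2i' pair per line."""
    with open(path, "w") as f:
        for i in range(lines):
            f.write(f"{i} {i * 2}\n")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = os.path.join(tmp, "sample.txt")
        make_sample(sample)
        print("readlines():", peak_bytes(count_with_readlines, sample))
        print("iteration:  ", peak_bytes(count_with_iteration, sample))
```

Both functions return the same line count, but the peak for readlines() grows with the size of the file, while the peak for iteration stays roughly constant.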

Read large text files in Python using iterate

In this method, we import the fileinput module. Its input() function can be used to read large files. It takes a list of filenames (if no argument is passed, it uses the files named in sys.argv[1:] and falls back to stdin when there are none) and returns an iterator that yields the individual lines of the files being scanned.

Note: We will also use Python's time module to measure how long reading the file takes.
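A minimal sketch of this approach, assuming the goal is simply to count lines and time the scan. The function name `count_lines` and the sample file are hypothetical.

```python
import fileinput
import os
import tempfile
import time


def count_lines(paths):
    """Count lines across the given files; returns (line_count, seconds_elapsed).

    `paths` must be non-empty: with no files, fileinput reads sys.argv[1:] or stdin.
    """
    start = time.perf_counter()
    count = 0
    with fileinput.input(files=paths) as lines:
        for _ in lines:  # lines are produced one at a time
            count += 1
    return count, time.perf_counter() - start


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = os.path.join(tmp, "sample.txt")
        with open(sample, "w") as f:
            f.write("first\nsecond\nthird\n")
        total, seconds = count_lines([sample])
        print(f"{total} lines read in {seconds:.6f} seconds")
```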

How to Read Large Text Files in Python

Python's file object provides various ways to read a text file. A popular way is to use the readlines() method, which returns a list of all the lines in the file. However, it is not suitable for reading a large text file, because the whole file content is loaded into memory.

Reading Large Text Files in Python

We can use the file object as an iterator. The iterator returns the lines one by one, so each can be processed as it arrives. This does not read the whole file into memory, which makes it suitable for reading large files in Python. Here is a code snippet that reads a large file by treating it as an iterator.

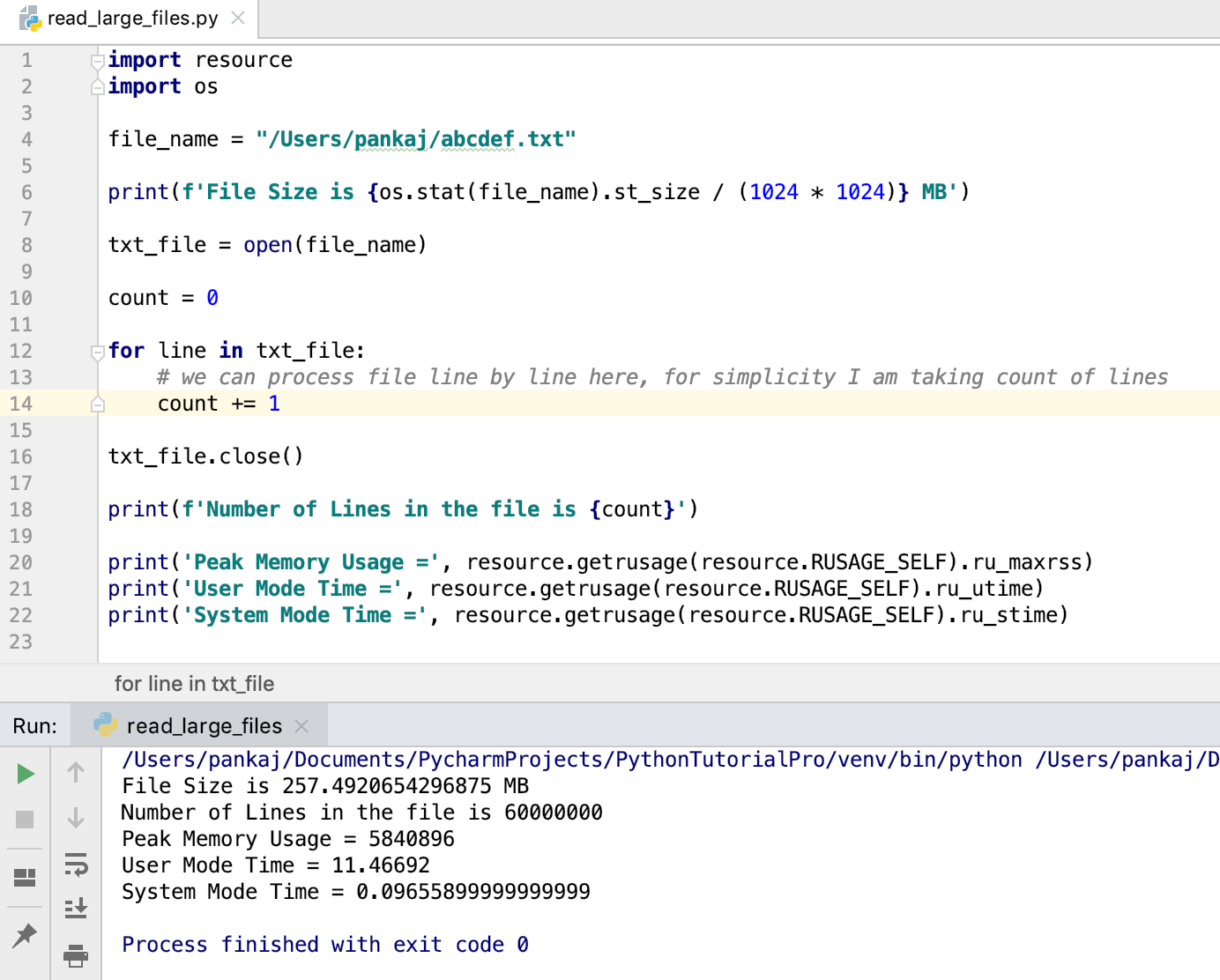

    import resource
    import os

    file_name = "/Users/pankaj/abcdef.txt"

    print(f'File Size is {os.stat(file_name).st_size / (1024 * 1024)} MB')

    txt_file = open(file_name)

    count = 0

    for line in txt_file:
        # we can process file line by line here, for simplicity I am taking count of lines
        count += 1

    txt_file.close()

    print(f'Number of Lines in the file is {count}')

    print('Peak Memory Usage =', resource.getrusage(resource.RUSAGE_SELF).ru_maxrss)
    print('User Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_utime)
    print('System Mode Time =', resource.getrusage(resource.RUSAGE_SELF).ru_stime)

Output:

    File Size is 257.4920654296875 MB
    Number of Lines in the file is 60000000
    Peak Memory Usage = 5840896
    User Mode Time = 11.46692
    System Mode Time = 0.09655899999999999

- I am using os module to print the size of the file.

- The resource module is used to check the memory and CPU time usage of the program.

We can also use the with statement to open the file. In this case, we don’t have to explicitly close the file object.

    with open(file_name) as txt_file:
        for line in txt_file:
            # process the line
            pass

What if the Large File doesn’t have lines?

The above code will work great when the large file content is divided into many lines. But, if there is a large amount of data in a single line then it will use a lot of memory. In that case, we can read the file content into a buffer and process it.

    with open(file_name) as f:
        while True:
            data = f.read(1024)
            if not data:
                break
            print(data)

The above code reads the file in chunks of up to 1024 characters (1024 bytes if the file is opened in binary mode) and prints each chunk to the console. When the whole file has been read, read() returns empty data and the break statement terminates the while loop. The same pattern is useful for reading binary files such as images, PDFs, and Word documents, provided the file is opened in binary mode ('rb'). Here is a simple code snippet to make a copy of the file.

    with open(destination_file_name, 'w') as out_file:
        with open(source_file_name) as in_file:
            for line in in_file:
                out_file.write(line)
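The line-by-line copy suits text files. For binary data, or for files without line breaks, a chunked copy in binary mode is one option. The sketch below is an illustration, with a hypothetical function name and an arbitrarily chosen chunk size.

```python
import os
import tempfile


def copy_in_chunks(source_path, destination_path, chunk_size=64 * 1024):
    """Copy a file in fixed-size binary chunks; returns the number of bytes copied."""
    copied = 0
    with open(source_path, "rb") as src, open(destination_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)  # at most chunk_size bytes held in memory
            if not chunk:                 # empty bytes object means end of file
                break
            dst.write(chunk)
            copied += len(chunk)
    return copied


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "source.bin")
        target = os.path.join(tmp, "copy.bin")
        with open(source, "wb") as f:
            f.write(os.urandom(200_000))  # stand-in for an image or PDF
        print(copy_in_chunks(source, target), "bytes copied")
```

The standard library's shutil.copyfileobj() implements the same loop and may be preferable in real code.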