- urllib.error — Exception classes raised by urllib.request

- How to Fix HTTPError in Python

- HTTPError Attributes

- What Causes HTTPError

- Python HTTPError Examples

- 404 Not Found

- 400 Bad Request

- 401 Unauthorized

- 500 Internal Server Error

- How to Fix HTTPError in Python

- [Fixed] urllib.error.httperror: http error 403: forbidden

- What is a 403 error?

- Why urllib.error.httperror: http error 403: forbidden occurs?

- Why do sites use security that sends 403 responses?

- Resolving urllib.error.httperror: http error 403: forbidden?

- Method 1: using user-agent

- Method 2: using Session object

- Catching urllib.error.httperror

- FAQs

- Conclusion

urllib.error — Exception classes raised by urllib.request

The urllib.error module defines the exception classes for exceptions raised by urllib.request. The base exception class is URLError.

The following exceptions are raised by urllib.error as appropriate:

exception urllib.error.URLError

The handlers raise this exception (or derived exceptions) when they run into a problem. It is a subclass of OSError.

reason: The reason for this error. It can be a message string or another exception instance.

Changed in version 3.3: URLError has been made a subclass of OSError instead of IOError.

exception urllib.error.HTTPError(url, code, msg, hdrs, fp)

Although it is an exception (a subclass of URLError), an HTTPError can also function as a non-exceptional file-like return value (the same thing that urlopen() returns). This is useful when handling exotic HTTP errors, such as requests for authentication.

code: An HTTP status code as defined in RFC 2616. This numeric value corresponds to a value found in the dictionary of codes in http.server.BaseHTTPRequestHandler.responses.

reason: This is usually a string explaining the reason for this error.

headers: The HTTP response headers for the HTTP request that caused the HTTPError.

exception urllib.error.ContentTooShortError(msg, content)

This exception is raised when the urlretrieve() function detects that the amount of the downloaded data is less than the expected amount (given by the Content-Length header). The content attribute stores the downloaded (and supposedly truncated) data.
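The class hierarchy and the file-like behaviour described above can be checked without any network access. The following is a minimal sketch that builds an HTTPError by hand; the helper names make_error and inspect_error and the example.invalid URL are illustrative assumptions, not part of urllib.

```python
import io
import urllib.error
from email.message import Message
from http.server import BaseHTTPRequestHandler

# The hierarchy described above: HTTPError -> URLError -> OSError.
print(issubclass(urllib.error.HTTPError, urllib.error.URLError))
print(issubclass(urllib.error.URLError, OSError))


def make_error(code, body):
    """Build an HTTPError by hand (hypothetical helper for offline use)."""
    # responses maps a status code to a (short phrase, long description) pair.
    phrase = BaseHTTPRequestHandler.responses[code][0]
    headers = Message()
    headers["Content-Type"] = "text/plain"
    return urllib.error.HTTPError(
        "http://example.invalid/", code, phrase, headers, io.BytesIO(body)
    )


def inspect_error(err):
    """Collect the documented attributes plus the file-like response body."""
    with err:  # an HTTPError is also a response object, so it can be read and closed
        return {
            "code": err.code,
            "reason": err.reason,
            "content_type": err.headers["Content-Type"],
            "body": err.read(),
        }


try:
    raise make_error(404, b"no such page")
except urllib.error.URLError as err:  # catching the base class also catches HTTPError
    info = inspect_error(err)

print(info)
```

Because HTTPError derives from URLError, code that needs the status code should catch HTTPError first and fall back to URLError for failures that never produced an HTTP response.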

How to Fix HTTPError in Python

The urllib.error.HTTPError is a class in the Python urllib library that represents an HTTP error. An HTTPError is raised when an HTTP request returns a status code that represents an error, such as 4xx (client error) or 5xx (server error).

HTTPError Attributes

The urllib.error.HTTPError class has the following attributes:

- code: The HTTP status code of the error.

- reason: The human-readable reason phrase associated with the status code.

- headers: The HTTP response headers for the request that caused the HTTPError.

What Causes HTTPError

Here are some common reasons why an HTTPError might be raised:

- Invalid or malformed request URL.

- Invalid or malformed request parameters or body.

- Invalid or missing authentication credentials.

- Server internal error or malfunction.

- Server temporarily unavailable due to maintenance or overload.

Python HTTPError Examples

Here are a few examples of HTTP errors in Python:

404 Not Found

    import urllib.request
    import urllib.error

    try:
        response = urllib.request.urlopen('http://httpbin.org/status/404')
    except urllib.error.HTTPError as err:
        # err.code is the numeric status, err.reason the reason phrase
        print(f'A HTTPError was thrown: {err.code} {err.reason}')

In the above example, urllib.request.urlopen() is used to open a URL that the server reports as missing. Running the above code raises an HTTPError with code 404:

    A HTTPError was thrown: 404 NOT FOUND

400 Bad Request

    import urllib.request
    import urllib.error

    try:
        response = urllib.request.urlopen('http://httpbin.org/status/400')
    except urllib.error.HTTPError as err:
        if err.code == 400:
            print('Bad request!')
        else:
            # Any other status: report the code and reason phrase
            print(f'An HTTP error occurred: {err.code} {err.reason}')

In the above example, a bad request is sent to the server. Running the above code raises an HTTPError with code 400:

    Bad request!

401 Unauthorized

    import urllib.request
    import urllib.error

    try:
        response = urllib.request.urlopen('http://httpbin.org/status/401')
    except urllib.error.HTTPError as err:
        if err.code == 401:
            print('Unauthorized!')
        else:
            # Any other status: report the code and reason phrase
            print(f'An HTTP error occurred: {err.code} {err.reason}')

In the above example, a request is sent to the server with missing credentials. Running the above code raises an HTTPError with code 401:

    Unauthorized!

500 Internal Server Error

    import urllib.request
    import urllib.error

    try:
        response = urllib.request.urlopen('http://httpbin.org/status/500')
    except urllib.error.HTTPError as err:
        if err.code == 500:
            print('Internal server error!')
        else:
            # Any other status: report the code and reason phrase
            print(f'An HTTP error occurred: {err.code} {err.reason}')

In the above example, the server experiences an error internally. Running the above code raises an HTTPError with code 500:

    Internal server error!
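The four examples above repeat the same pattern, so they can be folded into one function that looks up the status code in a table. This is only a sketch: the names MESSAGES, check_url and fake_opener, and the opener parameter used to simulate responses without a network, are assumptions made for illustration.

```python
import io
import urllib.error
import urllib.request
from email.message import Message

# Messages for the status codes covered above; any other code falls through
# to a generic message built from err.code and err.reason.
MESSAGES = {
    400: "Bad request!",
    401: "Unauthorized!",
    404: "Not found!",
    500: "Internal server error!",
}


def check_url(url, opener=urllib.request.urlopen):
    """Return a short status string for url.

    `opener` is a hypothetical injection point so the function can be
    exercised offline; it defaults to urllib.request.urlopen.
    """
    try:
        with opener(url) as response:
            return f"OK: {response.status}"
    except urllib.error.HTTPError as err:
        # Must come before URLError, because HTTPError is its subclass.
        return MESSAGES.get(
            err.code, f"An HTTP error occurred: {err.code} {err.reason}"
        )
    except urllib.error.URLError as err:
        # No HTTP response at all (DNS failure, refused connection, ...).
        return f"Failed to reach the server: {err.reason}"


def fake_opener(code):
    """Build a stand-in opener that always fails with the given status code."""
    def opener(url):
        raise urllib.error.HTTPError(url, code, "Simulated", Message(), io.BytesIO(b""))
    return opener


print(check_url("http://example.invalid/", fake_opener(401)))
print(check_url("http://example.invalid/", fake_opener(418)))
```

Against a live server, check_url("http://httpbin.org/status/404") would go through the same HTTPError branch as the simulated calls.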

How to Fix HTTPError in Python

To fix HTTP errors in Python, the following steps can be taken:

- Check the network connection and ensure it is stable and working.

- Check the URL being accessed and make sure it is correct and properly formatted.

- Check the request parameters and body to ensure they are valid and correct.

- Check whether the request requires authentication credentials and make sure they are included in the request and are correct.

- If the request and URL are correct, check the HTTP status code and reason returned in the error message. This can give more information about the error.

- Try adding error handling code for the specific error. For example, the request can be attempted again or missing parameters can be added to the request.
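The last step, retrying a failed request, might look like the sketch below. It retries only on 5xx responses, since a 4xx response means the request itself needs fixing. The function name fetch_with_retry and the parameters attempts, delay, opener and sleep are illustrative choices, not a standard API; opener and sleep exist so the logic can be run without a network or real waiting.

```python
import io
import time
import urllib.error
import urllib.request
from email.message import Message


def fetch_with_retry(url, attempts=3, delay=1.0,
                     opener=urllib.request.urlopen, sleep=time.sleep):
    """Fetch url, retrying with exponential backoff on 5xx responses only."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            with opener(url) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            # Give up on client errors and on the final attempt.
            if err.code < 500 or attempt == attempts:
                raise
            sleep(delay * 2 ** (attempt - 1))  # waits delay, 2*delay, 4*delay, ...


# Offline demonstration: a stand-in opener that fails twice with 503.
calls = []


def flaky_opener(url):
    calls.append(url)
    if len(calls) < 3:
        raise urllib.error.HTTPError(
            url, 503, "Service Unavailable", Message(), io.BytesIO(b"")
        )
    return io.BytesIO(b"payload")  # supports `with` and .read(), like a response


waits = []
body = fetch_with_retry("http://example.invalid/", opener=flaky_opener, sleep=waits.append)
print(body, waits)
```

Passing waits.append as sleep records the backoff intervals instead of pausing, which keeps the demonstration instant.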


[Fixed] urllib.error.httperror: http error 403: forbidden

Unlike the requests library, which is a third-party package, the urllib module is built into Python and can be used to make HTTP requests to a site. Using it reduces dependencies. In this article, we will discuss why urllib.error.httperror: http error 403: forbidden occurs and how to resolve it.

What is a 403 error?

A 403 error occurs when a user tries to access a forbidden page, in other words, a page they are not permitted to access. 403 is one of the HTTP status codes that a web server uses to indicate what kind of problem occurred on the client or the server side. For instance, 200 is the status code meaning that everything worked as expected, with no errors.

Why urllib.error.httperror: http error 403: forbidden occurs?

ModSecurity is a module that protects websites from external attacks. It checks whether requests come from a human user or from an automated bot, and it blocks requests from known spider/bot agents that try to scrape the site. Since urllib identifies itself with a default User-Agent such as Python-urllib/3.3, it is easily detected as non-human and gets blocked by ModSecurity.

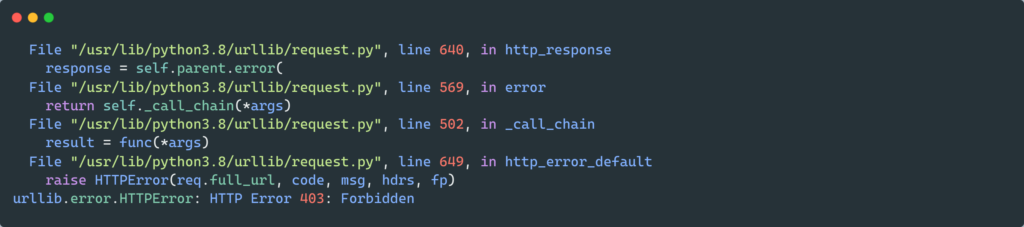

    from urllib.request import Request, urlopen

    url = "https://www.gamefaqs.com"

    # No User-Agent is set, so urllib sends its default one
    request_site = Request(url)
    webpage = urlopen(request_site).read()
    print(webpage[:200])

ModSecurity blocks the request and returns HTTP Error 403: Forbidden if the request was made without a valid user agent. User-Agent is a request header whose string value lets network protocol peers identify the following:

- The operating system, for instance, Windows, Linux, or macOS.

- The web browser.

Moreover, the browser sends the user agent to every website you connect to. The User-Agent field is included in the HTTP request headers, and its value differs from browser to browser.
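To see what urllib announces by default, the headers of a freshly built opener can be inspected without sending any request. This is a small sketch; the variable names are arbitrary.

```python
import urllib.request

# A new opener carries the headers urllib adds to every request it sends.
opener = urllib.request.build_opener()
default_headers = dict(opener.addheaders)

# The value has the form "Python-urllib/<major>.<minor>".
print(default_headers["User-agent"])
```

The version suffix follows the running interpreter, so the exact string differs between Python releases.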

Why do sites use security that sends 403 responses?

According to a survey, more than 50% of internet traffic comes from automated sources such as scrapers and bots, so sites need to defend against them. Moreover, scrapers tend to send many requests in quick succession, and sites enforce rate limits that dictate how many requests a user can make. If the user (here, the scraper) exceeds the limit, it gets some kind of error, for instance, urllib.error.httperror: http error 403: forbidden.
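On the client side, one way to stay under a rate limit is to space requests out. The sketch below is an assumption-laden illustration: the Throttle class, its min_interval parameter and the injectable clock and sleep arguments are invented here, and the right interval depends on the site's published limits.

```python
import time


class Throttle:
    """Allow at most one call every min_interval seconds (client-side sketch)."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock      # injectable so the class can be tested quickly
        self._sleep = sleep
        self._last = None        # time of the previous call, if any

    def wait(self):
        """Block until min_interval has passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Usage: call throttle.wait() immediately before each request.
throttle = Throttle(min_interval=1.0)
throttle.wait()  # the first call returns at once
```

Throttling does not guarantee access; a site may still reject automated clients regardless of request rate.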

Resolving urllib.error.httperror: http error 403: forbidden?

This error is caused by ModSecurity detecting urllib’s default user agent as a scraping bot and blocking it. To resolve it, we have to include a User-Agent header in our scraper’s requests so that they are not blocked on that basis. Let’s take a look at two ways to avoid urllib.error.httperror: http error 403: forbidden.

Method 1: using user-agent

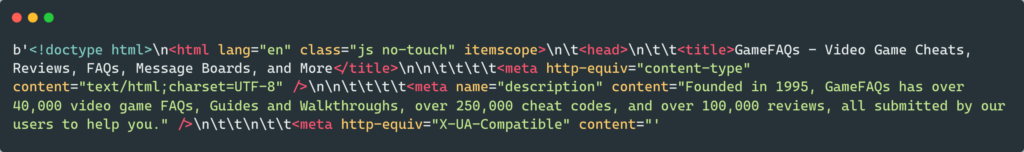

    from urllib.request import Request, urlopen

    url = "https://www.gamefaqs.com"

    # Send a browser-like User-Agent instead of urllib's default
    request_site = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    webpage = urlopen(request_site).read()
    print(webpage[:500])

- In the code above, we have added a new parameter called headers, which sets the User-Agent to Mozilla/5.0. The user-agent string gives the web server details about the user’s device, OS, and browser. This prevents the bot from being blocked by the site.

- For instance, a user-agent string can tell the server that you are using the Brave browser on Linux. The server then tailors its response accordingly.
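The header can be verified locally before any request is sent, and it can also be set once on an opener so that every request made through it carries the same value. In this sketch the USER_AGENT constant is a placeholder taken from the example above, and nothing is actually fetched.

```python
import urllib.request

USER_AGENT = "Mozilla/5.0"  # placeholder value from the example above

# Per-request: pass the header to Request and confirm it was recorded.
req = urllib.request.Request(
    "https://www.gamefaqs.com", headers={"User-Agent": USER_AGENT}
)
# Request stores header names capitalized, hence "User-agent" here.
print(req.get_header("User-agent"))

# Per-opener: every request sent through this opener carries the header.
opener = urllib.request.build_opener()
opener.addheaders = [("User-Agent", USER_AGENT)]
# opener.open(req.full_url) would now send it; this sketch stops short of that.
```

Setting the header on the opener avoids repeating the headers argument when a script makes many requests.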

Method 2: using Session object

There are times when even setting a user agent won’t prevent urllib.error.httperror: http error 403: forbidden. In that case, we can use the Session object of the requests library. For instance:

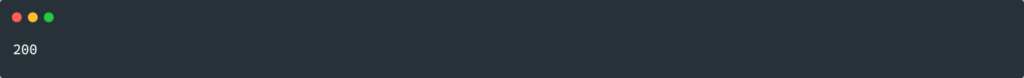

    import requests

    url = "https://www.gamefaqs.com"

    # A Session keeps cookies set by the site and sends them back later
    session_obj = requests.Session()
    response = session_obj.get(url, headers={"User-Agent": "Mozilla/5.0"})
    print(response.status_code)

The site could be using cookies as a defense mechanism against scraping: it sets cookies and expects them to be echoed back on later requests. Scraping might also be against the site’s policy.

The Session object stores cookies and sends them back automatically on subsequent requests.
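If adding the requests dependency is not an option, similar cookie persistence can be approximated with the standard library. Note that this swaps in a different technique from the Session example: it combines http.cookiejar.CookieJar with urllib.request.HTTPCookieProcessor. The fetch helper is a hypothetical name, and the sketch does not contact any site.

```python
import http.cookiejar
import urllib.request

# The jar collects cookies from responses and replays them on later requests.
cookie_jar = http.cookiejar.CookieJar()
opener = urllib.request.build_opener(
    urllib.request.HTTPCookieProcessor(cookie_jar)
)
opener.addheaders = [("User-Agent", "Mozilla/5.0")]


def fetch(url):
    """Fetch url through the cookie-aware opener (hypothetical helper)."""
    with opener.open(url) as response:
        return response.read()


# Nothing has been fetched yet, so the jar is still empty.
print(len(cookie_jar))
```

After a call such as fetch(url), iterating over cookie_jar shows which cookies the site set.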

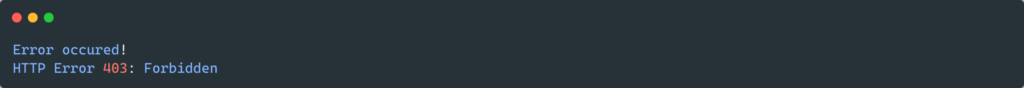

Catching urllib.error.httperror

urllib.error.HTTPError can be caught using a try-except block. A bare except clause captures every exception, including SystemExit and KeyboardInterrupt, which makes problems hard to debug, so it is better to catch HTTPError explicitly. For instance:

    from urllib.request import Request, urlopen
    from urllib.error import HTTPError

    url = "https://www.gamefaqs.com"

    try:
        request_site = Request(url)
        webpage = urlopen(request_site).read()
        print(webpage[:500])
    except HTTPError as e:
        # e prints as, for example, "HTTP Error 403: Forbidden"
        print("Error occurred!")
        print(e)

FAQs

To resolve a 403 error in the browser, try the following steps: refresh the page, recheck the URL, clear the browser cookies, and check your user credentials.

Scrapers often do not send browser-like headers when requesting information, so ModSecurity detects them. Hence, scraping modules often get 403 errors.

Conclusion

In this article, we covered why and when urllib.error.httperror: http error 403: forbidden can occur. We also looked at different ways to resolve the error and at how to handle it.

![[Fixed] urllib.error.httperror http error 403 forbidden](https://www.pythonpool.com/urllib-error-httperror-http-error-403-forbidden/http-error-403-forbidden.webp)