- Debugging Python server memory leaks with the Fil profiler

- A trivial leaky server

- Simulating traffic

- Finding the leak with Fil

- Next steps

- How to Fix Memory Leaks in Python?

- Prerequisites

- What is a memory leak?

- What causes memory leaks in Python?

- Large objects lingering in the memory that aren’t released

- Reference styles in the code

- Underlying libraries

- Methods to fix memory leaks

- Using debugging to solve memory leaks

- Using tracemalloc to track down memory leaks in Python

- Conclusion

Debugging Python server memory leaks with the Fil profiler

Your server is running just fine, handling requests and sending responses. But then, ever so slowly, memory usage creeps up, and up, and up–until eventually your process runs out of memory and crashes. And then it restarts, and the leaking starts all over again.

In order to fix memory leaks, you need to figure out where that memory is being allocated. And that can be tricky, unless you use the right tools.

Let’s see how you can identify the exact lines of code that are leaking by using the Fil memory profiler.

A trivial leaky server

In order to simulate a memory leak, I’m going to be running the following simple Flask server:

```python
from flask import Flask

app = Flask(__name__)

cache = {}  # never evicted, so it grows with every new page


@app.route("/<page>")
def index(page):
    if page not in cache:
        cache[page] = f"<h1>Welcome to {page}</h1>"  # generate the HTML once and cache it
    return cache[page]


if __name__ == "__main__":
    app.run()
```

This is a simplified example of caching an expensive operation so it only has to be run once. Unfortunately, it doesn’t limit the size of the cache, so if the server starts getting random queries, the cache will grow indefinitely, thus leaking memory.

Since this is a trivial example, you can figure out the memory leak in this code simply by reading it, but in the real world it’s not so easy. That’s where profilers come in.

Let’s see the leak in action, and then see how Fil can help you spot it.

Simulating traffic

In order to trigger the leak, we’ll send a series of random requests to the server using the following script:

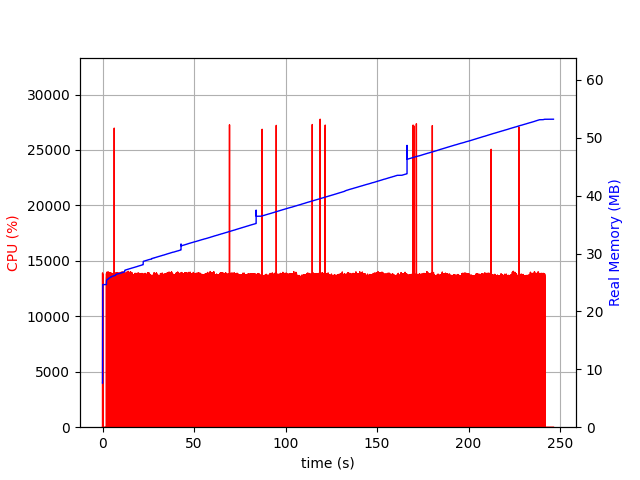

from random import random from requests import get while True: get(f"http://localhost:5000/random()>") If we run the server using psrecord, and run this random request script, we can see how memory grows over time:

$ psrecord --plot memory.png "python leaky.py" Here’s what the CPU and memory usage looks like after sending traffic to the server for a while:

As you can see, the more queries, the more memory usage goes up.

Finding the leak with Fil

So what code exactly is causing the leak? For a real application with a large code base, figuring this out can be quite difficult.

This is where Fil comes in. Fil is a memory profiler that records the peak memory usage of your application, as well as which code allocated it. While Fil was designed for scientific and data science applications, it turns out that recording peak memory works quite well for detecting memory leaks as well.

Consider the memory usage graph above. If you have a memory leak, the more time passes, the higher memory usage is. Eventually memory use is dominated by the leak–which means inspecting peak memory usage will tell us where the memory leak is.

After installing Fil by doing `pip install filprofiler` or `conda install -c conda-forge filprofiler`, I can run the program under Fil, and again generate some simulated traffic:

```
$ fil-profile run leaky.py
=fil-profile= Memory usage will be written out at exit, and opened automatically in a browser.
=fil-profile= You can also run the following command while the program is still running to write out peak memory usage up to that point: kill -s SIGUSR2 1285041
 * Serving Flask app "__init__" (lazy loading)
 * Environment: production
   WARNING: This is a development server. Do not use it in a production deployment.
   Use a production WSGI server instead.
 * Debug mode: off
 * Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)
127.0.0.1 - - [06/Aug/2020 10:57:29] "GET /0.8582927186632662 HTTP/1.1" 200 -
127.0.0.1 - - [06/Aug/2020 10:57:29] "GET /0.04644592969681438 HTTP/1.1" 200 -
127.0.0.1 - - [06/Aug/2020 10:57:29] "GET /0.7531563433260747 HTTP/1.1" 200 -
127.0.0.1 - - [06/Aug/2020 10:57:29] "GET /0.6687347621524576 HTTP/1.1" 200 -
```

Eventually I hit Ctrl-C, and get the following report:

If you follow the widest, reddest frames towards the bottom of the graph, you’ll see that line 11 of `leaky.py`, the line that generates the HTML and adds it to the cache, is responsible for 47% of the memory usage. The longer the server runs, the higher that percentage will be.

If you click on that frame, you’ll also get the full traceback.

Next steps

If you want to try this yourself, make sure you’re using Fil 0.9.0 or later (which in turn requires pip version 19 or later). Older versions of Fil are much slower and have much more memory overhead when profiling code that does lots of allocations.

There are some caveats to using Fil:

- There is some overhead: it runs 2-3× as slowly depending on the workload.

- You need to run the program under Fil; you can’t attach to already running processes.

Nonetheless, if you’re trying to debug a memory leak in your server, do give Fil a try: it will help you spot the exact parts of your application that are leaking.
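Once a profiler has pointed you at an unbounded cache like the one in `leaky.py`, one possible fix is to cap its size. The following is a minimal sketch, not part of the original server: `BoundedCache`, `max_entries`, and `get_or_create` are hypothetical names, and least-recently-used eviction is just one reasonable policy among several.

```python
from collections import OrderedDict


class BoundedCache:
    """Hypothetical LRU cache: evicts the least recently used entry when full."""

    def __init__(self, max_entries=1000):
        self.max_entries = max_entries
        self._data = OrderedDict()

    def get_or_create(self, key, factory):
        if key in self._data:
            self._data.move_to_end(key)  # mark as recently used
            return self._data[key]
        value = factory(key)
        self._data[key] = value
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # drop the least recently used entry
        return value

    def __len__(self):
        return len(self._data)


cache = BoundedCache(max_entries=3)
for page in ["a", "b", "c", "d", "e"]:
    cache.get_or_create(page, lambda p: f"<h1>Welcome to {p}</h1>")

print(len(cache))  # stays at max_entries no matter how many pages are requested
```

With a cap in place, random traffic can still fill the cache, but memory usage levels off instead of growing for as long as the server runs.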

How to Fix Memory Leaks in Python?

Every Python program relies on memory to hold its instructions and data, and managing that memory well is key to keeping the program efficient. When memory is managed poorly, the result can be a memory leak.

If unused data piles up and is never released, your Python program has a memory leak. To keep performance acceptable, you need to diagnose such leaks and resolve them.

But how can this be achieved?

You need to understand what a memory leak is, what causes it, and which methods you can use to solve it. Memory profiling helps here: it shows how much memory each part of your Python code uses.

Prerequisites

To follow along, you need an intermediate understanding of Python and some basic knowledge of memory management.

What is a memory leak?

A memory leak is the incorrect management of memory allocations by a computer program, where memory that is no longer needed isn’t released. When unused objects pile up in memory, your program has a memory leak.

A memory leak gradually fills the memory available to the program. As free memory runs short, the program slows down or crashes.

If your application keeps allocating memory without releasing any, it eventually exhausts the server’s memory pool. The next large allocation may then crash the application.

Memory leaks were more prevalent when programmers mostly used C and C++, because those languages require you to free memory manually.

While a leaky application runs, stale data accumulates and memory use keeps growing. The memory is reclaimed when the process exits, but for a long-running application that may only happen when it crashes.

What causes memory leaks in Python?

Python programs, like programs in other languages, can leak memory. A leak happens when data that is no longer needed is never reclaimed, usually because something still references it.

Python addresses this with automatic memory management, which frees memory once objects are no longer referenced.

However, objects that are unused but still referenced are not reclaimed by the garbage collector, and they accumulate as a memory leak.

Below are factors that may cause memory leaks in Python:

Large objects lingering in the memory that aren’t released

Large objects that the program no longer needs can linger in memory because something still holds a reference to them, for example a module-level list, a global cache, or an attribute of a long-lived object. As long as that reference exists, Python can’t release the object. These lingering objects take up space, and as they accumulate they cause a memory leak.

Reference styles in the code

How your code holds references determines whether memory leaks occur or are avoided.

A reference points to an object; assigning a reference doesn’t create a separate copy of that object. If an object is no longer in use but can’t be garbage collected because it’s still referenced somewhere else in the application, the result is a memory leak.

Python has different kinds of references, such as strong and weak references, and they affect garbage collection differently.

Strong references are the default and the most convenient in everyday programming. But an object can’t be garbage collected while a strong reference to it exists. When such objects pile up, they cause memory leaks.
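To make the difference concrete, here is a small sketch using the standard `weakref` module. The `Page` class and the two caches are hypothetical, and the immediate freeing shown here relies on CPython's reference counting; other Python implementations may free objects later.

```python
import gc
import weakref


class Page:
    """Hypothetical object that we want to cache."""

    def __init__(self, name):
        self.name = name


# A plain dict holds strong references: the object stays alive.
strong_cache = {}
page = Page("home")
strong_cache["home"] = page
probe = weakref.ref(page)  # lets us observe the object without keeping it alive

del page
gc.collect()
alive_with_strong_ref = probe() is not None  # the dict still references the Page

del strong_cache["home"]
gc.collect()
alive_after_removal = probe() is not None  # no references left, so it was freed

# A WeakValueDictionary holds weak references: entries vanish with their objects.
weak_cache = weakref.WeakValueDictionary()
other_page = Page("about")
weak_cache["about"] = other_page
del other_page
gc.collect()
still_cached = "about" in weak_cache

print(alive_with_strong_ref, alive_after_removal, still_cached)
```

A weak-valued cache only keeps entries that some other part of the program is still using, so it fits cases where the cache should never be the sole reason an object stays in memory.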

Underlying libraries

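Python uses many libraries for visualization, modeling, and data processing. Though these libraries make data tasks much easier, they have been linked to memory leaks. Many of them are implemented partly in C or other compiled languages and manage their own memory, so a bug in such a library can leak memory that Python’s garbage collector never sees.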

Methods to fix memory leaks

It is essential to diagnose and fix memory leaks before they crash a program. Python’s memory manager does most of the work for you: it allocates memory for the objects your application reads and writes.

It also reclaims the memory of data that is no longer used, which keeps memory use efficient.

In CPython, the standard Python implementation, an object is freed as soon as its reference count drops to zero. A built-in garbage collector additionally finds and frees unreferenced objects that are tied together in reference cycles.

As a Python programmer, you therefore rarely need to free memory yourself. CPython reclaims unreferenced data automatically.

Although this handles most cases, the garbage collector can’t reclaim objects that are still referenced. That’s why you need other methods to track down the cause of a memory leak.
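As an illustration of what the garbage collector does on top of CPython's reference counting, the sketch below builds a reference cycle and then collects it with the standard `gc` module. The `Node` class is hypothetical, and automatic collection is disabled only to make the demonstration deterministic.

```python
import gc
import weakref


class Node:
    """Hypothetical object that can point at another Node."""

    def __init__(self):
        self.other = None


gc.disable()  # pause automatic collection so the timing is predictable

a, b = Node(), Node()
a.other, b.other = b, a    # a and b now reference each other
probe = weakref.ref(a)     # observe `a` without keeping it alive

del a, b
alive_before_collect = probe() is not None  # the cycle keeps both objects alive

unreachable = gc.collect()  # the cycle detector finds and frees them
alive_after_collect = probe() is not None

gc.enable()
print(alive_before_collect, alive_after_collect, unreachable)
```

The cycle is reclaimed because nothing outside it references the two nodes. Had one of them also been stored in a global list, the collector would have left both alone.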

Using debugging to solve memory leaks

You can debug memory usage in Python with the built-in gc (garbage collector) module. It can give you a list of the objects the garbage collector is tracking.

This lets you see which kinds of objects are taking up most of the memory, and you can then filter them by usage.

If you find objects that are no longer in use but are still referenced, remove the references to them so that their memory can be reclaimed.
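A minimal sketch of this approach, assuming a hypothetical `Session` class whose instances pile up in a list. `gc.get_objects()` is a real function in the standard library; the helper names `count_tracked_objects` and `count_instances` are made up for this example.

```python
import gc
from collections import Counter


class Session:
    """Hypothetical class standing in for objects your application creates."""


def count_tracked_objects(limit=5):
    """Return the most common types among objects tracked by the collector."""
    counts = Counter(type(obj).__name__ for obj in gc.get_objects())
    return counts.most_common(limit)


def count_instances(cls):
    """Count tracked objects whose type is exactly `cls`."""
    return sum(1 for obj in gc.get_objects() if type(obj) is cls)


leaked_sessions = [Session() for _ in range(500)]  # simulated leak

print(count_tracked_objects())   # a per-type overview of tracked objects
print(count_instances(Session))  # how many Session objects are still alive

leaked_sessions.clear()          # drop the references that kept them alive
print(count_instances(Session))
```

Note that `gc.get_objects()` only lists container-like objects the collector tracks, so simple values such as integers and strings don't appear in it. Comparing the counts at two points in time is usually more informative than a single reading.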

Using tracemalloc to track down memory leaks in Python

One of Python’s most useful built-in modules for this job is tracemalloc. It offers a quick and effective way to investigate memory leaks in Python, because it links an object to the exact place where it was first allocated.

tracemalloc shows which functions and lines in your program are allocating memory, and tracks how much memory those allocations use. You can use that information to find the cause of a memory leak. Once you’ve found the objects responsible, you can fix the code that holds on to them or remove them.
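The sketch below shows one common way to use it: take a snapshot before and after the suspicious workload and compare the two. The `leaky_cache` dictionary and `handle_request` function are hypothetical stand-ins for real application code.

```python
import tracemalloc

leaky_cache = {}  # hypothetical unbounded cache


def handle_request(page):
    """Cache a generated page forever, which leaks memory under random traffic."""
    if page not in leaky_cache:
        leaky_cache[page] = f"<h1>Welcome to {page}</h1>" * 200
    return leaky_cache[page]


tracemalloc.start()
before = tracemalloc.take_snapshot()

for i in range(1000):
    handle_request(f"page-{i}")

after = tracemalloc.take_snapshot()
tracemalloc.stop()

# Group allocations by source line and sort by how much each line grew.
top_stats = after.compare_to(before, "lineno")
for stat in top_stats[:3]:
    print(stat)
```

The first entries in the output point at the file and line number responsible for most of the growth, which in this sketch is the line that stores the generated page in `leaky_cache`.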

Conclusion

Python is among the best programming languages in use today, but memory leaks can crash a program or slow it down.

Fortunately, memory leaks in Python can be tracked down and fixed. Among other methods, you can use the gc module or tracemalloc to track memory usage and find the objects responsible for a leak.

Peer Review Contributions by: Srishilesh P S