- Analysing Response Time Differences from Apache Logs from PHP5.6 to PHP7.3

- Getting Logs

- Creating the Dataframe

- Basic Data Analysis

- Things I found analysing the logs of Other Sites

- Conclusion

- Find server response time in PHP

- PHP CURL response time bypass

- Very long loadtime php curl

- PHP script to check http response time for a particular URL

Analysing Response Time Differences from Apache Logs from PHP5.6 to PHP7.3

Recently I added response time to my Apache logs, to keep track of how long the server took to respond.

To do that, add %D to the LogFormat lines in your Apache config, apache2.conf:

    LogFormat "%v:%p %h %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\" %D" vhost_combined
    # Added response time %D
    LogFormat "%h %l %u %t \"%r\" %>s %O \"%{Referer}i\" \"%{User-Agent}i\" %D" combined

About 7 days after implementing this, I updated the PHP version on the server to PHP 7.3.
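With %D appended, the response time in microseconds becomes the last field of every log line. As a rough sketch (the helper name `response_time_ms` is made up for illustration, and the sample line is the one quoted later in this post), pulling it out of a single line could look like this:

```python
import shlex

# Sample line in the vhost_combined format with %D appended.
LINE = (
    'www.how-to-trade.co.za:443 105.226.233.14 - - [30/Oct/2019:16:27:11 +0200] '
    '"GET /feed/history?symbol=IMP&resolution=D&from=1571581631&to=1572445631 HTTP/1.1" '
    '200 709 "https://how-to-trade.co.za/chart/view" '
    '"Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko" 35959'
)


def response_time_ms(line):
    """Return the trailing %D value (microseconds) converted to milliseconds."""
    # shlex.split keeps the double-quoted request, referer and user agent as single fields.
    parts = shlex.split(line)
    return int(parts[-1]) / 1000.0


print(response_time_ms(LINE))
```

Splitting on quotes rather than plain spaces matters because the request line and the user agent both contain spaces.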

Getting Logs

I copied the logs (all those modified after the 17th of October) to a folder with:

    find . -type f -newermt 2019-10-17 | xargs cp -t /home/stephen/response_time/

Now that I have the logs, what I want to do is unzip them and aggregate them all into a single pandas dataframe.

Creating the Dataframe

Just get the damn data it doesn’t need to be pretty:

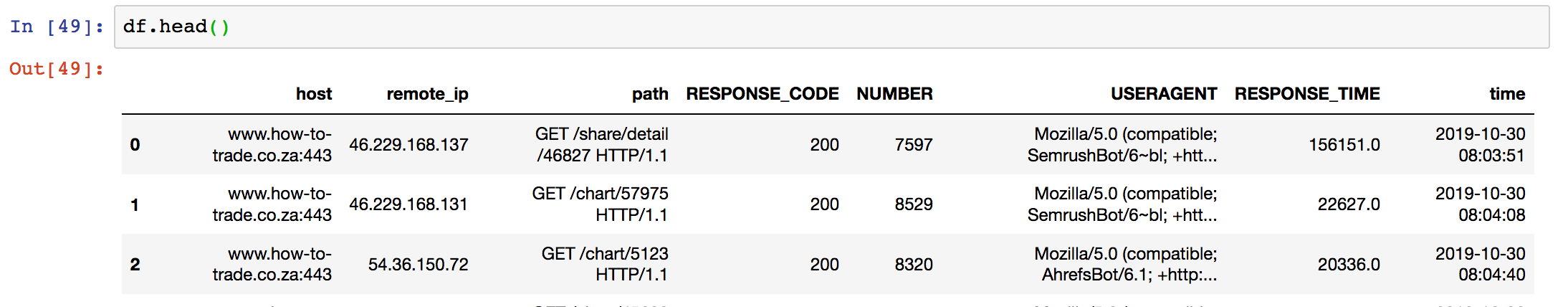

    import pandas as pd

    # Sample log line:
    # www.how-to-trade.co.za:443 105.226.233.14 - - [30/Oct/2019:16:27:11 +0200] "GET /feed/history?symbol=IMP&resolution=D&from=1571581631&to=1572445631 HTTP/1.1" 200 709 "https://how-to-trade.co.za/chart/view" "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko" 35959
    fields = ['host', 'remote_ip', 'A', 'B', 'date_1', 'timezone', 'path',
              'RESPONSE_CODE', 'NUMBER', 'unknown', 'USERAGENT', 'RESPONSE_TIME']

    df = pd.DataFrame()
    for i in range(15):
        # One gzipped log file per rotation number
        data = pd.read_csv('how-to-trade-access.log.{}.gz'.format(i), compression='gzip',
                           sep=' ', header=None, names=fields, na_values=['-'])
        time = data.date_1 + data.timezone
        # Drop the timezone for simplicity
        time_trimmed = time.map(lambda s: s.strip('[]').split('-')[0].split('+')[0])
        data['time'] = pd.to_datetime(time_trimmed, format='%d/%b/%Y:%H:%M:%S')
        data = data.drop(columns=['date_1', 'timezone', 'A', 'B', 'unknown'])
        df = pd.concat([df, data])

So now we have a nice data frame…

Basic Data Analysis

So we are just looking at the how-to-trade.co.za site.

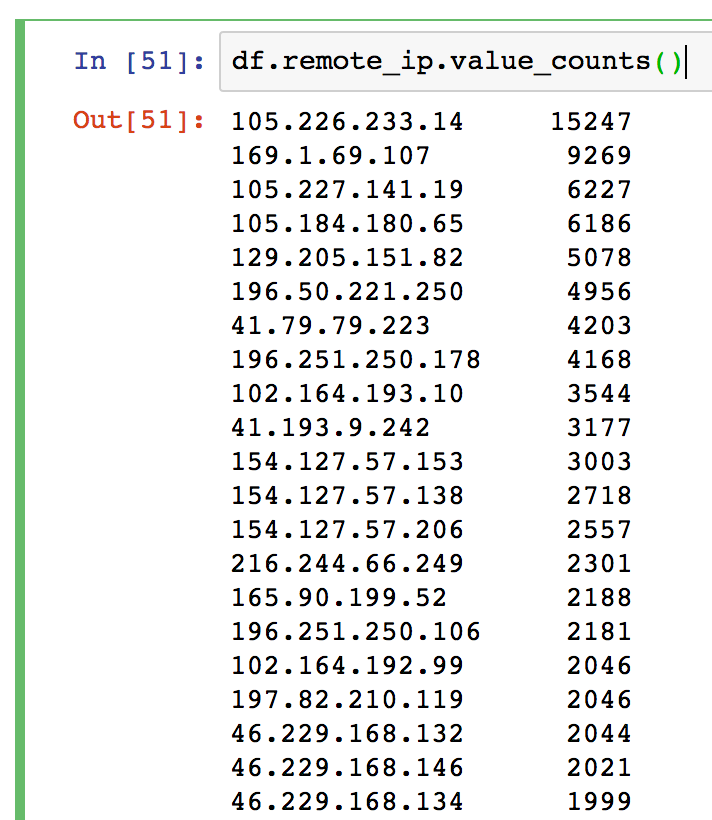

Most frequent IPs

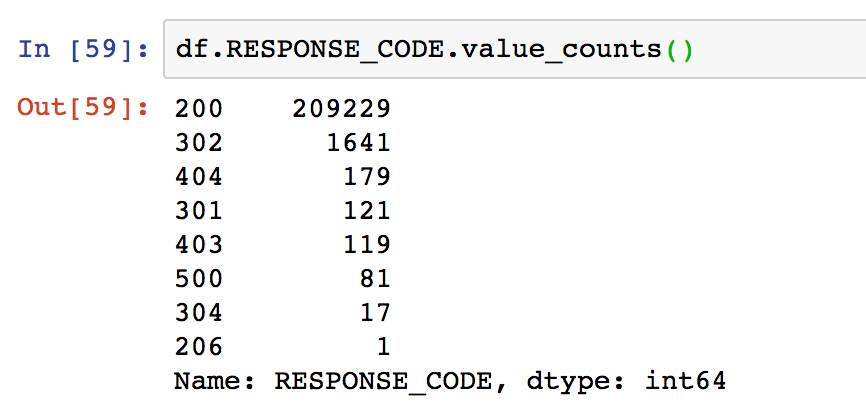

Most Common Response Codes

The single 206 Partial Content is interesting.

The 304 Not Modified responses and the 500 Internal Server Errors need to be looked into. The rest are pretty standard.

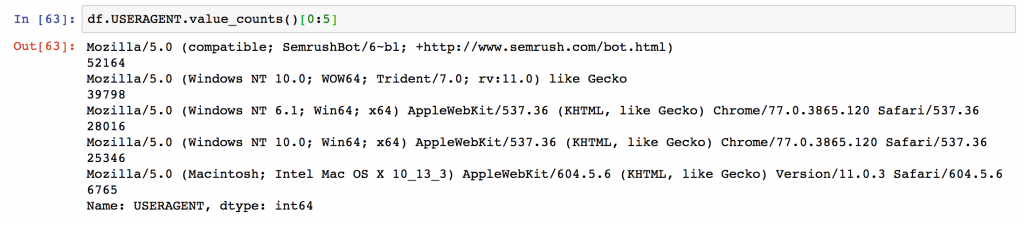

UserAgents

Checking the top 5 user agents, it seems that semrush is an abuser.

It is very important that I add this to my robots.txt.

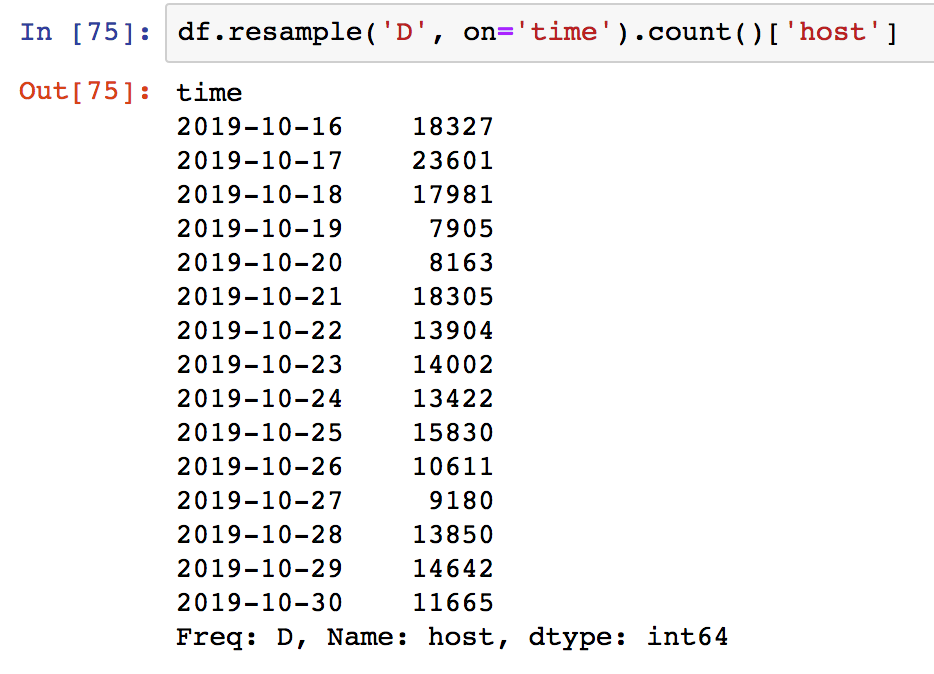

Number of Responses per Day

It would be nice if I could put a percentage here and also a day of the week so I can make some calls.
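One possible way to get both the percentage and the day of the week is sketched below. It assumes a dataframe with the `time` column built earlier; the function name `requests_per_day` and the demo rows are made up for illustration.

```python
import pandas as pd


def requests_per_day(df):
    """Count requests per calendar day, with each day's share and weekday name."""
    # Group on the date part only (time of day set to midnight).
    out = df.groupby(df['time'].dt.normalize()).size().to_frame('requests')
    out['percent'] = (out['requests'] / out['requests'].sum() * 100).round(2)
    out['weekday'] = out.index.day_name()
    return out


# Tiny made-up frame standing in for the real log dataframe.
demo = pd.DataFrame({'time': pd.to_datetime([
    '2019-10-17 10:00:00', '2019-10-17 11:00:00',
    '2019-10-18 09:00:00', '2019-10-19 09:00:00',
])})
print(requests_per_day(demo))
```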

I made the change on 17 October, so I need to drop all rows that don’t have a response time.

I then changed to PHP7.3 on 23 October 2019.

I then got the logs today.

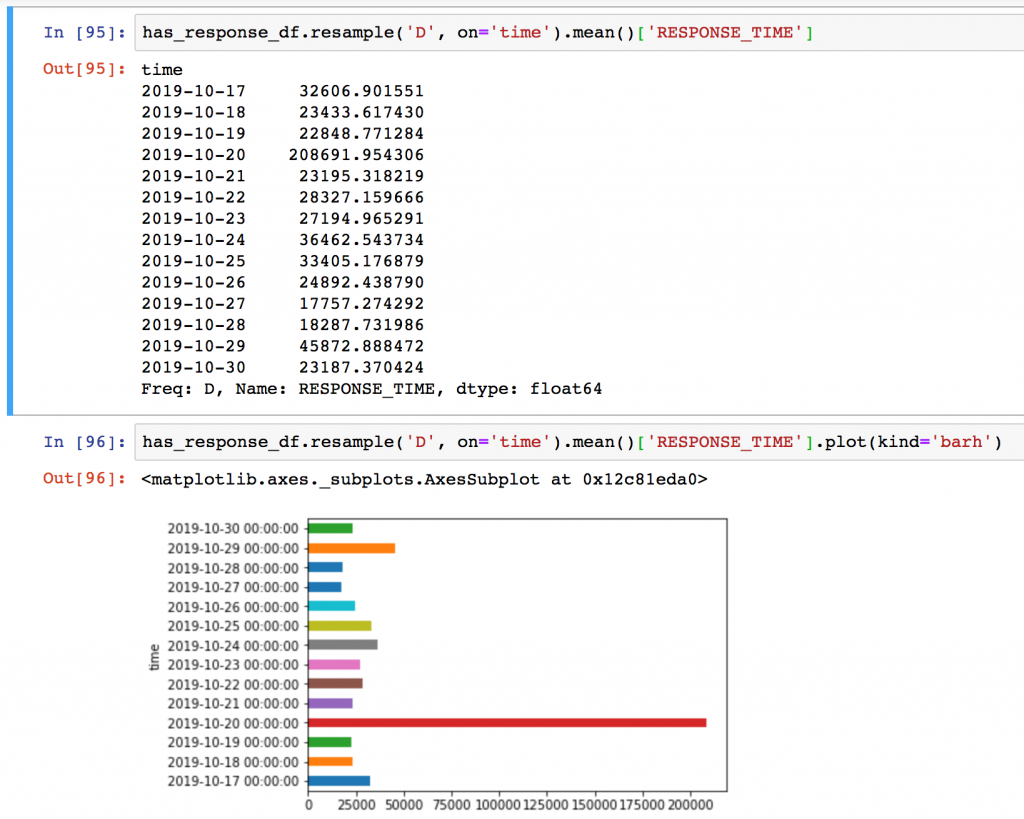

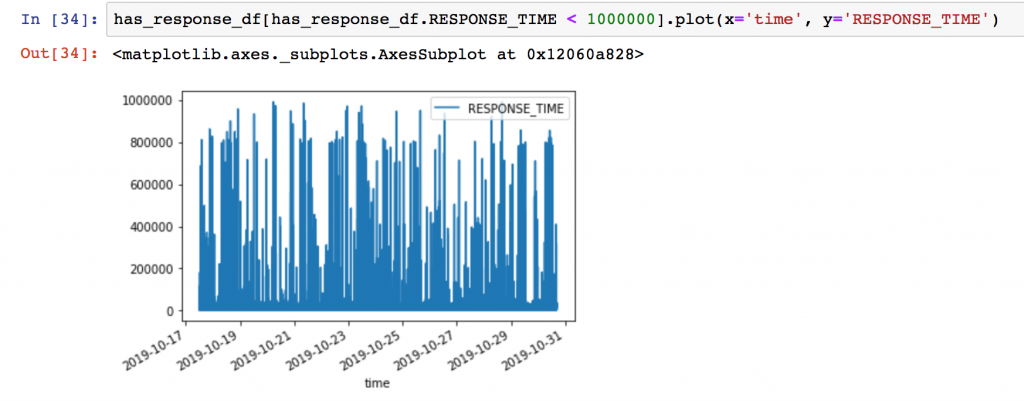

So let me get an average of the response times (in milliseconds).

Average Response Time

I got the mean response time and charted it, and it doesn’t really show a big drop after the switch to PHP7.3 on 23 October 2019.

Apache gives the response time in microseconds, so 32606 microseconds is 32.61 milliseconds.
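A minimal sketch of that conversion applied per day, assuming the `time` and `RESPONSE_TIME` columns from the dataframe above (the function name `daily_mean_ms` and the demo values are illustrative only):

```python
import pandas as pd


def daily_mean_ms(df):
    """Mean response time per calendar day, converted from microseconds to milliseconds."""
    ms = df['RESPONSE_TIME'] / 1000.0
    return ms.groupby(df['time'].dt.normalize()).mean()


# Made-up rows: two requests on one day, one on the next.
demo = pd.DataFrame({
    'time': pd.to_datetime(['2019-10-22 08:00:00', '2019-10-22 09:00:00', '2019-10-24 08:00:00']),
    'RESPONSE_TIME': [30000, 40000, 20000],
})
print(daily_mean_ms(demo))
```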

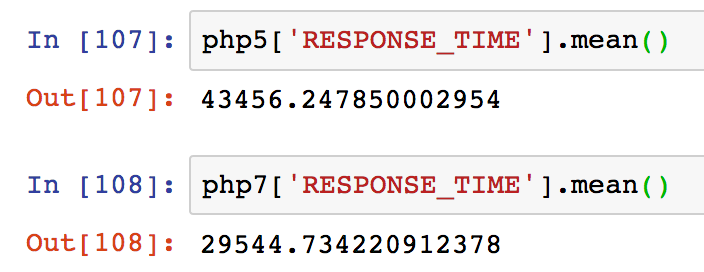

PHP5 vs PHP7 Benchmarked

Here is the data on the mean response times:

For PHP7.3 the mean response time was: 29.544 ms

For PHP5.6 the mean response time was: 43.456 ms
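A comparison like this could be produced roughly as follows. This is a sketch, not the exact code behind the numbers above: it assumes every request from 23 October onwards was served by PHP7.3 (the exact switch-over time is not known), and the function name `compare_versions` and the demo values are made up.

```python
import pandas as pd

# Assumption: everything logged from this date onwards counts as PHP7.3.
PHP73_SWITCH = pd.Timestamp('2019-10-23')


def compare_versions(df):
    """Mean, median and max response time in milliseconds for each PHP version."""
    timed = df.dropna(subset=['RESPONSE_TIME'])  # rows logged before %D was added have no time
    version = (timed['time'] >= PHP73_SWITCH).map({False: 'PHP5.6', True: 'PHP7.3'})
    ms = timed['RESPONSE_TIME'] / 1000.0
    return ms.groupby(version).agg(['mean', 'median', 'max'])


# Made-up rows on either side of the switch.
demo = pd.DataFrame({
    'time': pd.to_datetime(['2019-10-16', '2019-10-18', '2019-10-20', '2019-10-24', '2019-10-26']),
    'RESPONSE_TIME': [None, 40000.0, 46000.0, 30000.0, 28000.0],
})
print(compare_versions(demo))
```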

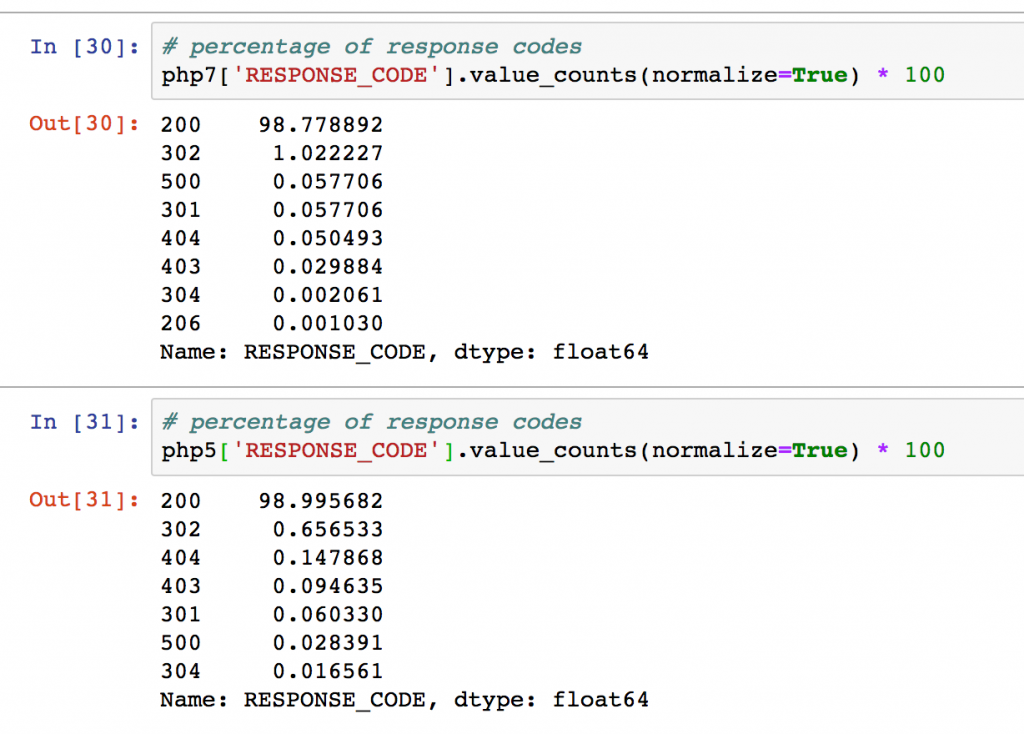

Error Rates

Looking at the response codes as percentages, nothing stands out in particular, apart from an increase in 500 errors on PHP7 that might need to be checked out.
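One way to get those percentages side by side is a normalised cross-tabulation. The sketch below assumes the `time` and `RESPONSE_CODE` columns from the dataframe above and the 23 October switch date; the function name `status_share_by_version` and the demo rows are made up for illustration.

```python
import pandas as pd


def status_share_by_version(df, switch=pd.Timestamp('2019-10-23')):
    """Percentage of each response code within each PHP version."""
    version = (df['time'] >= switch).map({False: 'PHP5.6', True: 'PHP7.3'}).rename('php')
    # normalize='columns' makes each version's column sum to 1 before scaling to percent.
    return (pd.crosstab(df['RESPONSE_CODE'], version, normalize='columns') * 100).round(2)


# Made-up rows: four requests before the switch, two after.
demo = pd.DataFrame({
    'time': pd.to_datetime(['2019-10-18', '2019-10-19', '2019-10-20', '2019-10-21',
                            '2019-10-24', '2019-10-25']),
    'RESPONSE_CODE': [200, 200, 200, 500, 200, 500],
})
print(status_share_by_version(demo))
```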

Finding Slow Pages

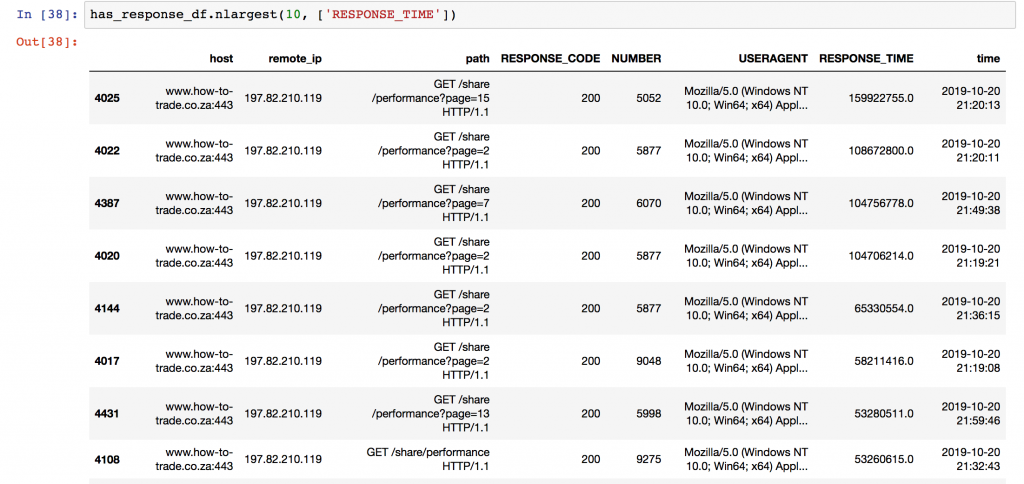

Now let’s find which pages are really slow and look to remove or change them:

It is clear that the /share/performance page is a problem.
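A ranking like this can be sketched by grouping on the URL. The version below assumes the `path` column holds the full request line (as in the sample log line) and strips the query string so that variants of the same page are counted together; the function name `slowest_paths` and the demo rows are made up.

```python
import pandas as pd


def slowest_paths(df, top=10, min_hits=1):
    """Rank URLs by mean response time in milliseconds."""
    # 'path' looks like 'GET /share/performance?x=1 HTTP/1.1'; keep the URL without its query string.
    url = df['path'].str.split().str[1].str.split('?').str[0]
    ms = df['RESPONSE_TIME'] / 1000.0
    stats = ms.groupby(url).agg(['count', 'mean', 'max'])
    return stats[stats['count'] >= min_hits].sort_values('mean', ascending=False).head(top)


# Made-up rows for illustration.
demo = pd.DataFrame({
    'path': ['GET /share/performance HTTP/1.1',
             'GET /share/performance?x=1 HTTP/1.1',
             'GET /feed/history?symbol=IMP HTTP/1.1'],
    'RESPONSE_TIME': [900000, 700000, 30000],
})
print(slowest_paths(demo))
```

Raising `min_hits` keeps a single slow one-off request from dominating the list.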

Things I found analysing the logs of Other Sites

Check the bots that are getting your stuff. I found there was a Turnitin crawler, which is used to find plagiarism. The thing is, I don’t mind if people copy that word for word. I don’t want them to get in trouble for it.

Conclusion

So not the most scientifically correct study but there is enough evidence here to say that:

Response time on PHP7.3 is on average 13.912 ms lower, which is a 32.01% reduction in response time.

From our tests PHP7.3 is 32.01% faster in terms of page response time

Maximum and median response times also indicate the same trend.
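The headline percentage follows directly from the two means reported earlier, as this short check shows (variable names are illustrative):

```python
# Mean response times from the benchmark section, in milliseconds.
php56_ms = 43.456
php73_ms = 29.544

saved_ms = php56_ms - php73_ms              # absolute improvement
reduction_pct = saved_ms / php56_ms * 100   # improvement relative to the PHP5.6 baseline

print(round(saved_ms, 3), round(reduction_pct, 2))
```

The percentage is measured against the PHP5.6 mean, which is why it describes a reduction in response time.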

If you have ideas on improving the data science and analytics of this post I would love that!

Find server response time in PHP

I am using the following code to find the server response time.

I tested some websites using this code, and it reports a server response time of around 40 to 120 milliseconds. But when I open these sites, it takes around 2-4 seconds to get the first byte.

The server response time calculated by https://developers.google.com/speed/pagespeed/insights/ is also almost 2-4 seconds. So what’s wrong with that code?

You make no HTTP request to the server, but open a socket. What you are measuring here is not a server response time but a connection time.

You can try to load a page to get total connection, response, and data transfer time, e.g.:

    file_get_contents("http://$domain/")

Or you can use curl for even more control.

PHP CURL response time bypass

I have a cURL request which at times, depending on the request load, takes minutes to receive a response from the API, due to processing and calculations.

For the sake of a good user experience, this behavior is undesirable. While waiting for the response, sometimes for a long time, the user is unable to perform any functions on the website.

So I am looking for a way to let the user keep using the application while it waits for results from the API.

Solutions I have already considered.

- Recording the request and using a cron job to process it. Unfortunately there are a couple of pitfalls to that:
  - The need to run a cron job every minute or constantly.
  - Situations when the user request is minimal or the API is super fast at the moment, so the entire request may only take 2-3 seconds. But when using cron, if it was requested, let’s say, 30 seconds ahead of the next cron job run, you end up with a 32-second turnaround.

  So this solution may improve some cases and worsen others; I’m not sure I really like that.

- Aborting the cURL request after a few seconds. Although the API sends a separate response to a URL endpoint of my choice, and it seems safe to terminate the transmission after posting the request, there might be situations when those few seconds are not enough time to even establish the connection with the API. I guess what I am trying to say is that, by terminating cURL, I have no way of knowing if the actual request made it through.

Is there another approach that I could consider? Thank you.

Sounds like what you’re asking for is an asynchronous cURL call.

Here’s the curl_multi_init documentation

Very long loadtime php curl

I’m trying to make a site for my Battlefield 1 clan. On one of the pages I’d like to display our team and some of their stats.

This API allows me to request just what I need, so I decided to use PHP curl requests to get this data on my site. It all works perfectly fine, but it is super slow; sometimes it even reaches PHP’s 30-second maximum execution time.

    <?php
    // $connection is an assumed name for the open mysqli connection
    $data = $connection->query("SELECT * FROM bfplayers");
    while($row = mysqli_fetch_assoc($data)) {
        $psnid = $row['psnid'];
        $ch = curl_init();
        curl_setopt($ch, CURLOPT_URL, "https://battlefieldtracker.com/bf1/api/Stats/BasicStats?platform=2&displayName=".$psnid);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, TRUE);
        curl_setopt($ch, CURLOPT_HEADER, FALSE);
        curl_setopt($ch, CURLOPT_SSL_VERIFYPEER, false);
        curl_setopt($ch, CURLOPT_SSL_VERIFYHOST, false);
        $headers = [
            'TRN-Api-Key: MYKEY',
        ];
        curl_setopt($ch, CURLOPT_HTTPHEADER, $headers);
        $response = curl_exec($ch);
        curl_close($ch);
        $result = json_decode($response, true);
        print($result['profile']['displayName']);
    }
    ?>

I have no idea why it’s going this slow. Is it because I am using XAMPP on localhost, or because the requests are going through a loop?

Your loop is not optimized in the slightest; I believe that if you optimized your loop code, it could run A LOT faster. You create and delete the curl handle on each iteration, when you could just keep re-using the same curl handle for each player (this would use less CPU and be faster). You don’t use compressed transfer (enabling compression would probably make the transfer faster). And most importantly, you run the API calls sequentially; I believe that if you did the API requests in parallel, it would load much faster. Also, you don’t urlencode psnid, which is probably a bug. Try this:

    <?php
    $cmh = curl_multi_init ();
    $curls = array ();
    // $connection is an assumed name for the open mysqli connection
    $data = $connection->query ( "SELECT * FROM bfplayers" );
    while ( ($row = mysqli_fetch_assoc ( $data )) ) {
        $psnid = $row ['psnid'];
        $tmp = array ();
        $tmp [0] = ($ch = curl_init ());
        $tmp [1] = tmpfile ();
        $curls [] = $tmp;
        curl_setopt_array ( $ch, array (
            CURLOPT_URL => "https://battlefieldtracker.com/bf1/api/Stats/BasicStats?platform=2&displayName=" . urlencode ( $psnid ),
            CURLOPT_ENCODING => '',
            CURLOPT_SSL_VERIFYPEER => false,
            CURLOPT_SSL_VERIFYHOST => false,
            CURLOPT_HTTPHEADER => array ( 'TRN-Api-Key: MYKEY' ),
            CURLOPT_FILE => $tmp [1]
        ) );
        curl_multi_add_handle ( $cmh, $ch );
        // start the transfer right away, while the next row is fetched
        curl_multi_exec ( $cmh, $active );
    }
    // wait until all transfers have finished
    do {
        do {
            $ret = curl_multi_exec ( $cmh, $active );
        } while ( $ret == CURLM_CALL_MULTI_PERFORM );
        curl_multi_select ( $cmh, 1 );
    } while ( $active );
    foreach ( $curls as $curr ) {
        fseek ( $curr [1], 0, SEEK_SET ); // https://bugs.php.net/bug.php?id=76268
        $response = stream_get_contents ( $curr [1] );
        $result = json_decode ( $response, true );
        print ($result ['profile'] ['displayName']);
    }
    // the rest is just cleanup, the client shouldn't have to wait for this
    // OPTIMIZEME: apache version of fastcgi_finish_request() ?
    if (is_callable ( 'fastcgi_finish_request' )) {
        fastcgi_finish_request ();
    }
    foreach ( $curls as $curr ) {
        curl_multi_remove_handle ( $cmh, $curr [0] );
        curl_close ( $curr [0] );
        fclose ( $curr [1] );
    }
    curl_multi_close ( $cmh );

It runs all API calls in parallel, uses transfer compression (CURLOPT_ENCODING), runs the API requests in parallel with downloading results from the db, and tries to disconnect the client before running cleanup routines, so it will probably run much faster.

Also, if mysqli_fetch_assoc() is causing slow roundtrips to your db, it would probably be even faster to replace it with mysqli_fetch_all().

Also, something that would probably be much faster than this would be to have a cronjob run every minute (or every 10 seconds?) that caches the results, and to show the cached result to the client. (Even if the API calls lag, the client’s page load wouldn’t be affected at all.)

PHP script to check http response time for a particular URL

I am looking for a PHP script which checks the http response time and, if the server fails to respond within x milliseconds, stores this information in a database along with the time. The failure may be due to a network problem at the location where the script is running. So, it should differentiate between the network downtime and server downtime.

«So, it should differentiate between the network downtime and server downtime.»

I doubt that’s possible. You’d have to execute a trace to the server and parse its output to see where the trace stops.

To check for the load time, you can simply use cURL ( http://php.net/curl ) and set a timeout value.

I doubt you could find a script to do exactly what you need, you have to build this yourself.

There’s likely other scripts created for this, but with a quick search here’s the first ones I found:

http://www.greenbird.info/xantus-webdevelopment/ping

http://220.237.146.14:6080/stech/products/thepinger.php

And at this site there’s a ping script as well as a traceroute script, may prove useful in troubleshooting the actual cause of the delay.

http://www.theworldsend.net/

I am using the following script, but I am getting the error message: Fatal error: Call to undefined function http_get()

    <?php
    $response = http_get("http://www.abc.com", array("timeout"=>1), $info);
    print_r($info);
    ?>

If you have an alternative script, please let me know. Please forgive my ignorance of PHP coding.

Shiva_Kumar, which one of the 3 links I provided is that code from? The 3 links each have apparently functional ping solutions in PHP.

This code is not from any of the links. The links you provided contain code to ping (though it is useful). However, what I am looking for is a way to send an http request, wait x milliseconds for the response, and assume the server is down if it does not respond.

The reason I am not using ping is that we have given some files to a hosting company to host, and I want to check whether they are keeping our files up all the time by running a script that stores what it finds (i.e., up or down) in a MySQL database. Ping will not give us the correct results, because the server may be up but responding slowly (I may be wrong, please correct me if I am).

If you have any alternative code, please provide it, something like the cURL code rohypnol mentioned in this post.