- Writing a palm recognition system in Python OpenCV

- What we will get as output

- Useful links

- Before starting

- Coding

- Summary


A Python program for hand detection and finger counting using the OpenCV library.

kostasthanos/Hand-Detection-and-Finger-Counting


Hand Detection and Finger Counting

This is a project made with Python and the OpenCV library.

For a better visual understanding of this project and its concepts, watch the video on YouTube.

Below are some instructions and information about the most significant parts of this project.

Preparing the environment

Define a smaller window (test_window) inside the main frame, which will be the ROI (Region of Interest).

The tests are visible only inside this window.

```python
# Define the Region Of Interest (ROI) window
top_left = (245, 50)
bottom_right = (580, 395)
cv2.rectangle(frame, (top_left[0]-5, top_left[1]-5), (bottom_right[0]+5, bottom_right[1]+5), (0,255,255), 3)
```

```python
# Frame shape : height and width
h, w = frame.shape[:2]  # h, w = 480, 640
```
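The snippets never show how test_window itself is created. A minimal sketch, assuming it is a plain NumPy slice of frame between top_left and bottom_right (the black 640x480 array is a stand-in for a camera frame):

```python
import numpy as np

# Assumption: a 640x480 BGR frame, matching the README's h, w = 480, 640
frame = np.zeros((480, 640, 3), dtype=np.uint8)

top_left = (245, 50)       # (x, y) of the ROI's top-left corner
bottom_right = (580, 395)  # (x, y) of the ROI's bottom-right corner

# NumPy indexes images as [row (y), column (x)], so the y range comes first
test_window = frame[top_left[1]:bottom_right[1], top_left[0]:bottom_right[0]]
print(test_window.shape)  # (345, 335, 3)

# Basic slicing returns a view: painting the ROI also paints the frame
test_window[:] = (0, 255, 0)
print(frame[top_left[1], top_left[0]].tolist())  # [0, 255, 0]
```

Because the slice is a view, anything drawn on test_window (contours, circles) also appears in frame. The yellow rectangle is presumably drawn 5 pixels outside the ROI so that it never ends up inside the region being analysed.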

Focus only on the user's hand: in this part, the hand must be isolated from the background.

Apply a Gaussian blur to the ROI.

```python
test_window_blurred = cv2.GaussianBlur(test_window, (5,5), 0)
```

The window is in BGR format by default. Convert it to HSV format.

```python
# Convert ROI only to HSV format
hsv = cv2.cvtColor(test_window_blurred, cv2.COLOR_BGR2HSV)
```
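OpenCV's 8-bit HSV format does not use the familiar 0-360 degree hue: hue is stored as degrees / 2 (0-179) so it fits in one byte, and saturation and value are scaled to 0-255. That is why the hue trackbars stop at 179. A small approximation of the conversion for a single pixel, built on Python's colorsys module instead of cv2 (rounding may differ from OpenCV by one unit):

```python
import colorsys

def bgr_to_opencv_hsv(b, g, r):
    """Approximate OpenCV's 8-bit BGR -> HSV conversion for one pixel.

    Hue is returned as degrees / 2 (0-179); saturation and value as 0-255.
    """
    # colorsys works on RGB floats in [0, 1] and returns h, s, v in [0, 1]
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    # h * 360 / 2 == h * 180; wrap 180 back to 0 since hue is circular
    return round(h * 180) % 180, round(s * 255), round(v * 255)

print(bgr_to_opencv_hsv(0, 0, 255))  # pure red   -> (0, 255, 255)
print(bgr_to_opencv_hsv(0, 255, 0))  # pure green -> (60, 255, 255)
print(bgr_to_opencv_hsv(255, 0, 0))  # pure blue  -> (120, 255, 255)
```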

To find the user's skin color (as HSV array values), the user can adjust the trackbars until the hand is the only visible object. The trackbars window must be defined before the program's main loop starts, so add this code right after importing the necessary packages:

```python
def nothing(x):
    pass

cv2.namedWindow("trackbars")
cv2.createTrackbar("Lower-H", "trackbars", 0, 179, nothing)
cv2.createTrackbar("Lower-S", "trackbars", 0, 255, nothing)
cv2.createTrackbar("Lower-V", "trackbars", 0, 255, nothing)
cv2.createTrackbar("Upper-H", "trackbars", 179, 179, nothing)
cv2.createTrackbar("Upper-S", "trackbars", 255, 255, nothing)
cv2.createTrackbar("Upper-V", "trackbars", 255, 255, nothing)
```

After that, it is time to define a color range from the trackbar values, stored as NumPy arrays.

```python
# Find finger (skin) color using trackbars
low_h = cv2.getTrackbarPos("Lower-H", "trackbars")
low_s = cv2.getTrackbarPos("Lower-S", "trackbars")
low_v = cv2.getTrackbarPos("Lower-V", "trackbars")
up_h = cv2.getTrackbarPos("Upper-H", "trackbars")
up_s = cv2.getTrackbarPos("Upper-S", "trackbars")
up_v = cv2.getTrackbarPos("Upper-V", "trackbars")

# Create a range for the colors (skin color)
lower_color = np.array([low_h, low_s, low_v])
upper_color = np.array([up_h, up_s, up_v])
```

```python
# Create a mask
mask = cv2.inRange(hsv, lower_color, upper_color)
cv2.imshow("Mask", mask)  # Show mask frame
```
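cv2.inRange marks a pixel as 255 only when all three channels fall inside the lower and upper bounds, with both bounds inclusive, and as 0 otherwise. A NumPy sketch of that rule for a 3-channel image; the HSV pixels and bounds are made up for illustration, not recommended skin-color limits:

```python
import numpy as np

def in_range(hsv, lower, upper):
    """NumPy sketch of cv2.inRange for a 3-channel image.

    A pixel becomes 255 when every channel lies in [lower, upper]
    (both bounds inclusive), otherwise 0.
    """
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255

# Hypothetical 1x3 HSV image: one "skin" pixel, one with the wrong hue,
# one with too little saturation
hsv = np.array([[[10, 100, 150], [90, 100, 150], [10, 10, 150]]], dtype=np.uint8)
lower_color = np.array([0, 48, 80])
upper_color = np.array([20, 255, 255])

print(in_range(hsv, lower_color, upper_color))  # [[255   0   0]]
```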

Computing the maximum contour and its convex hull

For each frame of the video capture, find the maximum contour inside the ROI.

```python
if len(contours) > 0:
    # Find the maximum contour each time (on each frame)
    # --Max Contour--
    max_contour = max(contours, key=cv2.contourArea)
    # Draw maximum contour (blue color); drawContours expects a list of contours
    cv2.drawContours(test_window, [max_contour], -1, (255,0,0), 3)
```
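The README does not show where contours comes from; presumably it is the output of cv2.findContours applied to mask (an assumption). Choosing the largest one relies on cv2.contourArea, which for a polygon equals the shoelace formula. A pure-Python sketch with hypothetical contours written as lists of (x, y) tuples instead of OpenCV arrays:

```python
def contour_area(points):
    """Shoelace formula: area of a closed polygon given as (x, y) vertices.

    For a simple contour this should match cv2.contourArea (oriented=False).
    """
    twice_area = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2

# Hypothetical contours: a small noise blob and a large hand-sized region
contours = [
    [(0, 0), (10, 0), (10, 10), (0, 10)],
    [(50, 50), (150, 50), (150, 250), (50, 250)],
]
max_contour = max(contours, key=contour_area)
print(contour_area(contours[0]), contour_area(max_contour))  # 100.0 20000.0
```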

Convex hull around the max contour

```python
# Find the convex hull "around" the max_contour
# --Convex Hull--
convhull = cv2.convexHull(max_contour, returnPoints = True)
# Draw convex hull (red color)
cv2.drawContours(test_window, [convhull], -1, (0,0,255), 3, 2)
```
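A convex hull is the smallest convex polygon that contains every contour point. OpenCV's convexHull is documented as using Sklansky's algorithm; the sketch below swaps in Andrew's monotone chain, a different algorithm that yields the same hull, to show the idea on plain (x, y) tuples:

```python
def convex_hull(points):
    """Andrew's monotone chain. Returns hull vertices counter-clockwise
    (in y-up coordinates), starting from the point with the smallest x."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a counter-clockwise turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        # Drop points that would make a clockwise (or straight) turn
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other one
    return lower[:-1] + upper[:-1]

# A square plus one interior point and one point lying on an edge
print(convex_hull([(0, 0), (4, 0), (4, 4), (0, 4), (2, 2), (2, 0)]))
# [(0, 0), (4, 0), (4, 4), (0, 4)]
```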

Finding the point with the minimum y-value

Because the y-axis of an image points downward, this is the highest point of the convex hull.

```python
min_y = h  # Set the minimum y-value equal to frame's height value
final_point = (w, h)
for i in range(len(convhull)):
    # Convert to plain ints so cv2.circle accepts the point
    point = (int(convhull[i][0][0]), int(convhull[i][0][1]))
    if point[1] < min_y:
        min_y = point[1]
        final_point = point
# Draw a circle (black color) to the point with the minimum y-value
cv2.circle(test_window, final_point, 5, (0,0,0), 2)
```
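The loop above is a manual minimum search over the hull points. The same selection can be written with Python's min and a key function; the hull points below are hypothetical:

```python
def topmost_point(hull):
    """Return the hull point with the smallest y.

    In image coordinates y grows downward, so this is the highest
    point on screen (typically a fingertip)."""
    return min(hull, key=lambda p: p[1])

# Hypothetical hull points (x, y) in ROI coordinates
hull = [(120, 300), (90, 140), (160, 35), (230, 120), (250, 310)]
print(topmost_point(hull))  # (160, 35)
```

Like the README's strict `<` comparison, min keeps the first point when several share the smallest y.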

Finding the center of the max_contour

The center of the max contour is the point (cX, cY), computed from the moments returned by cv2.moments().

```python
M = cv2.moments(max_contour)  # Moments
# Find the center of the max contour
if M["m00"] != 0:
    cX = int(M["m10"] / M["m00"])
    cY = int(M["m01"] / M["m00"])
    # Draw circle (red color) in the center of max contour
    cv2.circle(test_window, (cX, cY), 6, (0,0,255), 3)
```
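For a contour, m00 is the enclosed area and m10, m01 are the first-order moments, so the center is (m10 / m00, m01 / m00). A pure-Python sketch that computes these from the polygon vertices with Green's theorem; it should agree with cv2.moments on simple polygons, and the int() truncation mirrors the README:

```python
def polygon_centroid(points):
    """Centroid (cX, cY) of a closed polygon from its spatial moments."""
    area2 = sum_x = sum_y = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        cross = x1 * y2 - x2 * y1
        area2 += cross                # 2 * m00
        sum_x += (x1 + x2) * cross    # 6 * m10
        sum_y += (y1 + y2) * cross    # 6 * m01
    m00 = area2 / 2
    if m00 == 0:
        return None  # degenerate contour, like the M["m00"] != 0 guard
    m10, m01 = sum_x / 6, sum_y / 6
    # Vertex order flips the sign of every moment, which cancels in the ratios
    return int(m10 / m00), int(m01 / m00)

print(polygon_centroid([(0, 0), (10, 0), (10, 10), (0, 10)]))  # (5, 5)
print(polygon_centroid([(0, 0), (0, 30), (60, 30), (60, 0)]))  # (30, 15)
```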

Calculating the Defect points

Find and draw the polygon that is defined by the contour.

```python
# --Contour Polygon--
contour_poly = cv2.approxPolyDP(max_contour, 0.01*cv2.arcLength(max_contour, True), True)
# Draw contour polygon (white color)
cv2.fillPoly(test_window, [max_contour], text_color)
```
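cv2.approxPolyDP implements the Douglas-Peucker algorithm: between two endpoints it keeps the point farthest from the connecting segment whenever that distance exceeds epsilon, then recurses on both halves. In the README, epsilon is 1% of the closed contour's perimeter (cv2.arcLength). The sketch below handles open polylines only; OpenCV treats closed curves differently, so results on real contours may not match exactly:

```python
import math

def arc_length(points, closed=True):
    """Perimeter of a polyline; closed=True adds the edge back to the start."""
    n = len(points)
    edges = n if closed else n - 1
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(edges))

def douglas_peucker(points, epsilon):
    """Simplify an open polyline, keeping points farther than epsilon
    from the line through the current endpoints."""
    if len(points) < 3:
        return list(points)
    (x1, y1), (x2, y2) = points[0], points[-1]
    seg_len = math.dist(points[0], points[-1])
    best_i, best_d = 0, -1.0
    for i in range(1, len(points) - 1):
        x0, y0 = points[i]
        if seg_len == 0:
            d = math.dist(points[i], points[0])
        else:
            # Perpendicular distance from (x0, y0) to the endpoint line
            d = abs((x2 - x1) * (y1 - y0) - (x1 - x0) * (y2 - y1)) / seg_len
        if d > best_d:
            best_i, best_d = i, d
    if best_d <= epsilon:
        return [points[0], points[-1]]
    # Split at the farthest point and simplify both halves
    left = douglas_peucker(points[: best_i + 1], epsilon)
    right = douglas_peucker(points[best_i:], epsilon)
    return left[:-1] + right  # the split point appears in both halves

square = [(0, 0), (10, 0), (10, 10), (0, 10)]
print(arc_length(square))  # 40.0

# A nearly straight edge with a small bump, followed by a real corner
curve = [(0, 0), (5, 1), (10, 0), (10, 10)]
print(douglas_peucker(curve, epsilon=2))  # [(0, 0), (10, 0), (10, 10)]
```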

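The result of the command

```python
# convexityDefects needs the hull as indices into contour_poly, not as points
hull = cv2.convexHull(contour_poly, returnPoints=False)
defects = cv2.convexityDefects(contour_poly, hull)
```

is an array (or None when there are no defects) where each row contains:

- the index of the start point

- the index of the end point

- the index of the farthest point

- the approximate distance from the farthest point to the hull, as a fixed-point value (divide by 256 to get pixels)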
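Each row of defects holds indices into contour_poly rather than coordinates, and the depth is a fixed-point number with 8 fractional bits. A small sketch that decodes one row, using a hypothetical polygon written as a plain list of (x, y) points (in the real loop the row is defects[i][0]):

```python
def decode_defect(contour, defect):
    """Turn one convexityDefects row into points and a depth in pixels.

    contour: list of (x, y) points (the contour passed to convexityDefects)
    defect:  (start_index, end_index, far_pt_index, fixpt_depth)
    """
    start_index, end_index, far_pt_index, fixpt_depth = defect
    start_pt = contour[start_index]
    end_pt = contour[end_index]
    far_pt = contour[far_pt_index]
    depth = fixpt_depth / 256.0  # fixed-point value with 8 fractional bits
    # Midpoint between start and end (same as int((a + b) / 2) for non-negative ints)
    mid_pt = ((start_pt[0] + end_pt[0]) // 2, (start_pt[1] + end_pt[1]) // 2)
    return start_pt, end_pt, far_pt, mid_pt, depth

# Hypothetical polygon: two "fingertips" at indices 0 and 2, a valley at index 1
contour = [(100, 40), (130, 160), (160, 40), (200, 250), (60, 250)]
print(decode_defect(contour, (0, 2, 1, 30720)))
# ((100, 40), (160, 40), (130, 160), (130, 40), 120.0)
```

The depth of 120.0 matches the distance from the valley point (130, 160) to the line y = 40 joining the two tips, which is the quantity fixpt_depth approximates.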

Then, on each frame, find these points plus the midpoints between each start and end point, as below.

```python
points = []
if defects is not None:  # convexityDefects returns None when there are no defects
    for i in range(defects.shape[0]):  # Len of arrays
        start_index, end_index, far_pt_index, fix_dept = defects[i][0]
        # Convert to plain ints so cv2.circle accepts the points
        start_pts = tuple(int(v) for v in contour_poly[start_index][0])
        end_pts = tuple(int(v) for v in contour_poly[end_index][0])
        far_pts = tuple(int(v) for v in contour_poly[far_pt_index][0])
        mid_pts = (int((start_pts[0]+end_pts[0])/2), int((start_pts[1]+end_pts[1])/2))
        points.append(mid_pts)
        #--Start Points-- (yellow color)
        cv2.circle(test_window, start_pts, 2, (0,255,255), 2)
        #--End Points-- (black color)
        cv2.circle(test_window, end_pts, 2, (0,0,0), 2)
        #--Far Points-- (white color)
        cv2.circle(test_window, far_pts, 2, text_color, 2)
```

To count the fingers, we need a way to check how many are displayed. For each defect, we calculate the angle at the defect (farthest) point between the start point and the end point, as shown below.

```python
# (Runs inside the defects loop above, once per defect)
# --Calculate distances--
# If p1 = (x1, y1) and p2 = (x2, y2) are two points, then the distance between them is
# Dist : sqrt[(x2-x1)^2 + (y2-y1)^2]
# Distance between the start and the end defect point
a = math.sqrt((end_pts[0] - start_pts[0])**2 + (end_pts[1] - start_pts[1])**2)
# Distance between the farthest (defect) point and the start point
b = math.sqrt((far_pts[0] - start_pts[0])**2 + (far_pts[1] - start_pts[1])**2)
# Distance between the farthest (defect) point and the end point
c = math.sqrt((end_pts[0] - far_pts[0])**2 + (end_pts[1] - far_pts[1])**2)
if b > 0 and c > 0:  # Skip degenerate defects (angle undefined)
    # Law of cosines, clamped against rounding errors;
    # acos returns radians, so convert to degrees before comparing with 90
    cos_angle = max(-1.0, min(1.0, (b**2 + c**2 - a**2) / (2*b*c)))
    angle = math.degrees(math.acos(cos_angle))  # Find each angle
    # If angle > 90 then the farthest point is "outside the area of fingers"
    if angle < 90:
        count += 1
        frame[0:40, w-40:w] = (0)
```
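The angle at the defect point comes from the law of cosines, cos(angle) = (b^2 + c^2 - a^2) / (2*b*c), where a is the side opposite the defect point. math.acos returns radians, so the value has to be converted to degrees before it is compared with 90. A standalone sketch; returning 180 degrees for a degenerate defect is an assumption of this sketch, not something the README does:

```python
import math

def defect_angle(start_pt, far_pt, end_pt):
    """Angle in degrees at far_pt in the triangle start-far-end."""
    a = math.dist(start_pt, end_pt)  # side opposite the defect point
    b = math.dist(far_pt, start_pt)
    c = math.dist(far_pt, end_pt)
    if b == 0 or c == 0:
        return 180.0  # degenerate defect: treat it as "not between fingers"
    cos_angle = (b**2 + c**2 - a**2) / (2 * b * c)
    # Clamp to [-1, 1] so floating-point error cannot make acos fail
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

print(round(defect_angle((0, 10), (0, 0), (10, 0)), 6))     # 90.0
print(defect_angle((100, 40), (130, 160), (160, 40)) < 90)  # True: gap between two fingers
```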

```python
# Display the number of fingers (sharp defects + 1) in the top-right corner
for c in range(5):
    if count == c:
        cv2.putText(frame, str(count+1), (w-35,30), font, 2, text_color, 2)
if len(points) < 1:
    frame[0:40, w-40:w] = (0)
    cv2.putText(frame, "1", (w-35,30), font, 2, text_color, 2)
```
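Every defect sharper than 90 degrees is treated as a valley between two raised fingers, so the displayed number is count + 1. A compact sketch of that rule over a list of defect angles; capping the result at 5 is a choice of this sketch (the README's loop simply displays nothing once count reaches 5):

```python
def count_fingers(defect_angles, threshold=90.0):
    """Finger count from the angles (degrees) at each convexity defect.

    Each sharp valley (angle < threshold) lies between two raised fingers,
    so n sharp valleys mean n + 1 fingers, capped at 5.
    """
    sharp = sum(1 for angle in defect_angles if angle < threshold)
    return min(sharp + 1, 5)

print(count_fingers([]))                    # 1
print(count_fingers([28.1, 35.0, 150.0]))   # 3
print(count_fingers([20, 25, 30, 35, 40]))  # 5
```

As in the README, a closed fist and a single raised finger both produce 1, since neither shows a sharp valley.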

This project aims at understanding topics such as contours, convex hulls and contour polygons. It also focuses on the defect points found on the detected hand.

For a better visual understanding of this project and its concepts, watch the video.


Writing a palm recognition system in Python OpenCV

Hi everyone! Today I'll show you how to write a palm recognition system in Python + OpenCV (26 lines of code). This tutorial requires only minimal knowledge of OpenCV.

What we will get as output

Useful links

Before starting

Installation is done through the package manager:

Coding

I decided to use mediapipe because it already includes a trained AI model for palm recognition.

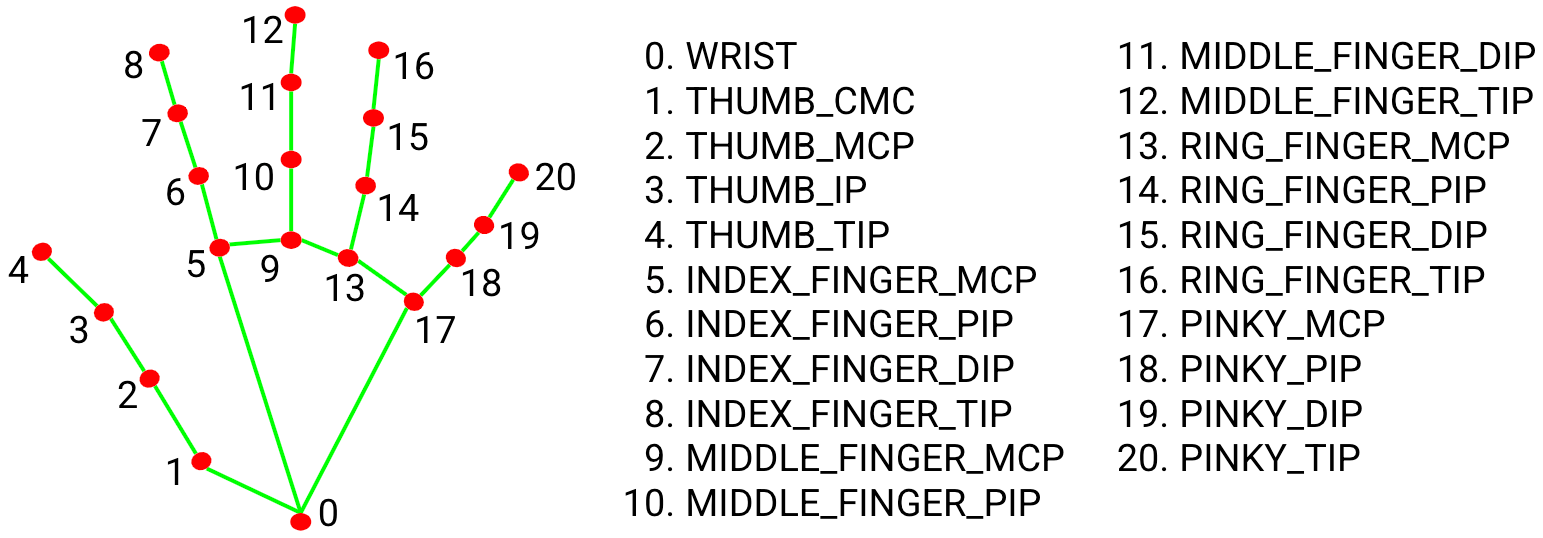

To begin with, you need to understand that a palm consists of joints, which can be represented as points:

```python
import cv2
import mediapipe as mp

cap = cv2.VideoCapture(0)  # Camera
hands = mp.solutions.hands.Hands(max_num_hands=1)  # AI object for palm detection
draw = mp.solutions.drawing_utils  # For drawing the palm

while True:
    # Close the window
    if cv2.waitKey(1) & 0xFF == 27:
        break

    success, image = cap.read()  # Read a frame from the camera
    if not success:  # Stop if the camera returned no frame
        break
    image = cv2.flip(image, -1)  # Flip the image to get a correct picture
    imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # Convert to RGB
    results = hands.process(imageRGB)  # Run mediapipe

    if results.multi_hand_landmarks:
        for handLms in results.multi_hand_landmarks:
            for id, lm in enumerate(handLms.landmark):
                h, w, c = image.shape
                cx, cy = int(lm.x * w), int(lm.y * h)  # Landmark position in pixels
            # Draw the palm once per hand, after visiting its landmarks
            draw.draw_landmarks(image, handLms, mp.solutions.hands.HAND_CONNECTIONS)

    cv2.imshow("Hand", image)  # Show the image

cap.release()
cv2.destroyAllWindows()
```

A solution to the error `ImportError: DLL load failed` can be found here.
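MediaPipe Hands returns 21 landmarks per hand, with x and y normalized to 0..1 by the image width and height; multiplying by w and h, as the inner loop does for cx and cy, gives pixel positions. A small sketch that picks out the fingertip landmarks (indices 4, 8, 12, 16 and 20) from a hypothetical list of (lm.x, lm.y) pairs, without calling mediapipe itself:

```python
# MediaPipe Hands landmark indices of the five fingertips
FINGERTIPS = {"thumb": 4, "index": 8, "middle": 12, "ring": 16, "pinky": 20}

def landmark_to_pixel(x, y, width, height):
    """Normalized (0..1) landmark coordinates to pixels, like cx, cy in the loop."""
    return int(x * width), int(y * height)

def fingertip_pixels(landmarks, width, height):
    """landmarks: 21 normalized (x, y) pairs, e.g. [(lm.x, lm.y) for lm in handLms.landmark]."""
    return {name: landmark_to_pixel(*landmarks[i], width, height)
            for name, i in FINGERTIPS.items()}

# Hypothetical hand in a 640x480 frame: every point at the center
# except the index fingertip, which is raised
landmarks = [(0.5, 0.5)] * 21
landmarks[8] = (0.5, 0.25)
tips = fingertip_pixels(landmarks, 640, 480)
print(tips["index"], tips["thumb"])  # (320, 120) (320, 240)
```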

The code has comments, but let's go through it in detail:

Imports of OpenCV and mediapipe.

```python
import cv2
import mediapipe as mp
```

The required objects: the camera (cap); the neural network that detects palms (hands), with the argument max_num_hands, the maximum number of palms to detect; and our "drawing helper" (draw).

```python
cap = cv2.VideoCapture(0)
hands = mp.solutions.hands.Hands(max_num_hands=1)
draw = mp.solutions.drawing_utils
```

Exit the loop if the key with code 27 (Esc) is pressed.

```python
if cv2.waitKey(1) & 0xFF == 27:
    break
```

Read a frame from the camera, flip it vertically and horizontally, and convert it to RGB. Then comes the most interesting part: we pass the image to the palm detector.

```python
success, image = cap.read()
image = cv2.flip(image, -1)
imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
results = hands.process(imageRGB)
```

The first if block checks whether any palms were found at all (any non-empty object is truthy). Next, the outer for loop iterates over the detected hands, each holding a set of points. The second for loop iterates over the points in that set.

draw.draw_landmarks is a handy utility that draws the palm on the image. Its arguments are the image, the set of points, and what to draw (in our case, the hand connections).

```python
if results.multi_hand_landmarks:
    for handLms in results.multi_hand_landmarks:
        for id, lm in enumerate(handLms.landmark):
            h, w, c = image.shape
            cx, cy = int(lm.x * w), int(lm.y * h)
        draw.draw_landmarks(image, handLms, mp.solutions.hands.HAND_CONNECTIONS)
```

Summary

We wrote palm recognition in Python in 26 lines of code. Cool, right? Please rate this post; it matters to me.