sklearn.metrics.confusion_matrix

Compute confusion matrix to evaluate the accuracy of a classification.

By definition a confusion matrix \(C\) is such that \(C_{i, j}\) is equal to the number of observations known to be in group \(i\) and predicted to be in group \(j\).

Thus in binary classification, the count of true negatives is \(C_{0,0}\), false negatives is \(C_{1,0}\), true positives is \(C_{1,1}\) and false positives is \(C_{0,1}\).
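The definition above can be checked by counting (true, predicted) pairs by hand. The following is a minimal sketch, assuming integer labels 0..2 so that each label can be used directly as a row or column index; the manual loop is for illustration only and is not how the library computes the matrix.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

y_true = [2, 0, 2, 2, 0, 1]
y_pred = [0, 0, 2, 2, 0, 2]

# Assumption: labels are the integers 0, 1, 2, so they double as indices.
n_classes = 3
C = np.zeros((n_classes, n_classes), dtype=int)
for i, j in zip(y_true, y_pred):
    # Row index = true label, column index = predicted label.
    C[i, j] += 1

# The hand-built counts agree with the library result.
print(np.array_equal(C, confusion_matrix(y_true, y_pred)))
```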

Parameters : y_true array-like of shape (n_samples,)

Ground truth (correct) target values.

y_pred array-like of shape (n_samples,)

Estimated targets as returned by a classifier.

labels array-like of shape (n_classes,), default=None

List of labels to index the matrix. This may be used to reorder or select a subset of labels. If None is given, those that appear at least once in y_true or y_pred are used in sorted order.

sample_weight array-like of shape (n_samples,), default=None

Sample weights.

normalize {'true', 'pred', 'all'}, default=None

Normalizes confusion matrix over the true (rows), predicted (columns) conditions or all the population. If None, confusion matrix will not be normalized.
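As a small illustration of the labels parameter, the sketch below reorders the classes and then restricts the matrix to a subset of them. The animal labels are the ones used in this page's examples; when a subset is given, samples whose true or predicted label falls outside it are not counted.

```python
from sklearn.metrics import confusion_matrix

y_true = ["cat", "ant", "cat", "cat", "ant", "bird"]
y_pred = ["ant", "ant", "cat", "cat", "ant", "cat"]

# Reorder: rows and columns follow the order given in `labels`.
cm_reordered = confusion_matrix(y_true, y_pred, labels=["cat", "bird", "ant"])
print(cm_reordered)

# Subset: the ("bird", "cat") sample is dropped because "bird" is not listed.
cm_subset = confusion_matrix(y_true, y_pred, labels=["ant", "cat"])
print(cm_subset)
```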

Returns : C ndarray of shape (n_classes, n_classes)

Confusion matrix whose i-th row and j-th column entry indicates the number of samples with true label being i-th class and predicted label being j-th class.
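The returned entries are counts by default; with normalize or sample_weight they become fractions or weighted totals. A minimal sketch follows, in which the weight vector is an arbitrary illustrative choice that doubles the influence of the last sample.

```python
import numpy as np
from sklearn.metrics import confusion_matrix

y_true = [2, 0, 2, 2, 0, 1]
y_pred = [0, 0, 2, 2, 0, 2]

# Each row sums to 1: fractions of each true class.
cm_true = confusion_matrix(y_true, y_pred, normalize="true")

# The whole matrix sums to 1: fractions of all samples.
cm_all = confusion_matrix(y_true, y_pred, normalize="all")

# Illustrative weights (an assumption): the last sample counts twice.
weights = [1.0, 1.0, 1.0, 1.0, 1.0, 2.0]
cm_weighted = confusion_matrix(y_true, y_pred, sample_weight=weights)

print(cm_true)
print(cm_all)
print(cm_weighted)
```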

See also : ConfusionMatrixDisplay.from_estimator

Plot the confusion matrix given an estimator, the data, and the label.

ConfusionMatrixDisplay.from_predictions

Plot the confusion matrix given the true and predicted labels.

ConfusionMatrixDisplay

Confusion Matrix visualization.

References : Wikipedia entry for the Confusion matrix (Wikipedia and other references may use a different convention for axes).

    >>> from sklearn.metrics import confusion_matrix
    >>> y_true = [2, 0, 2, 2, 0, 1]
    >>> y_pred = [0, 0, 2, 2, 0, 2]
    >>> confusion_matrix(y_true, y_pred)
    array([[2, 0, 0],
           [0, 0, 1],
           [1, 0, 2]])

    >>> y_true = ["cat", "ant", "cat", "cat", "ant", "bird"]
    >>> y_pred = ["ant", "ant", "cat", "cat", "ant", "cat"]
    >>> confusion_matrix(y_true, y_pred, labels=["ant", "bird", "cat"])
    array([[2, 0, 0],
           [0, 0, 1],
           [1, 0, 2]])

In the binary case, we can extract true positives, etc. as follows:

    >>> tn, fp, fn, tp = confusion_matrix([0, 1, 0, 1], [1, 1, 1, 0]).ravel()
    >>> (tn, fp, fn, tp)
    (0, 2, 1, 1)
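Once the four counts are unpacked, the usual binary metrics follow from their textbook formulas. The sketch below is one way to derive them; the variable names precision, recall and specificity are illustrative, and the cross-check uses the existing precision_score and recall_score functions.

```python
from sklearn.metrics import confusion_matrix, precision_score, recall_score

y_true = [0, 1, 0, 1]
y_pred = [1, 1, 1, 0]
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

precision = tp / (tp + fp)    # share of predicted positives that are correct
recall = tp / (tp + fn)       # share of actual positives that are found
specificity = tn / (tn + fp)  # share of actual negatives that are found

print(precision, recall, specificity)

# The dedicated metric functions give the same precision and recall.
print(precision_score(y_true, y_pred), recall_score(y_true, y_pred))
```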

sklearn.metrics.ConfusionMatrixDisplay

It is recommended to use from_estimator or from_predictions to create a ConfusionMatrixDisplay. All parameters are stored as attributes.

Parameters : confusion_matrix ndarray of shape (n_classes, n_classes)

display_labels ndarray of shape (n_classes,), default=None

Display labels for plot. If None, display labels are set from 0 to n_classes - 1.

Attributes : im_ matplotlib AxesImage

Image representing the confusion matrix.

text_ ndarray of shape (n_classes, n_classes), dtype=matplotlib Text, or None

Array of matplotlib Text objects, one per cell. None if include_values is False.

ax_ matplotlib Axes

Axes with confusion matrix.

figure_ matplotlib Figure

Figure containing the confusion matrix.
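The attributes listed above are only set once plot has been called. A minimal sketch follows, assuming a hand-written count matrix and iris-style class names chosen purely for illustration; the Agg backend is selected only so the snippet runs without a display and can be dropped in an interactive session.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: headless run; remove for interactive use
import numpy as np
from sklearn.metrics import ConfusionMatrixDisplay

# Illustrative counts and class names (not computed from a model here).
cm = np.array([[13, 0, 0], [0, 10, 6], [0, 0, 9]])
names = ["setosa", "versicolor", "virginica"]

disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=names)
disp.plot()

# ax_ and figure_ are ordinary matplotlib objects and can be customized.
disp.ax_.set_title("Confusion matrix")
disp.figure_.set_size_inches(5, 4)

# text_ holds one matplotlib Text object per cell.
print(disp.text_.shape)
print(disp.text_[1, 2].get_text())
```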

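See also : confusion_matrix

Compute Confusion Matrix to evaluate the accuracy of a classification.

ConfusionMatrixDisplay.from_estimator

Plot the confusion matrix given an estimator, the data, and the label.

ConfusionMatrixDisplay.from_predictions

Plot the confusion matrix given the true and predicted labels.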

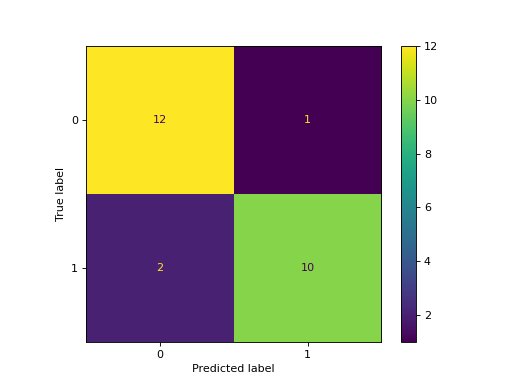

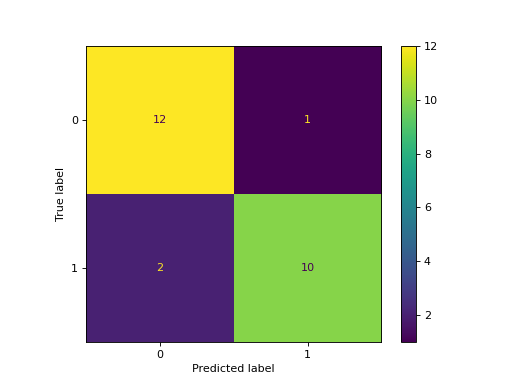

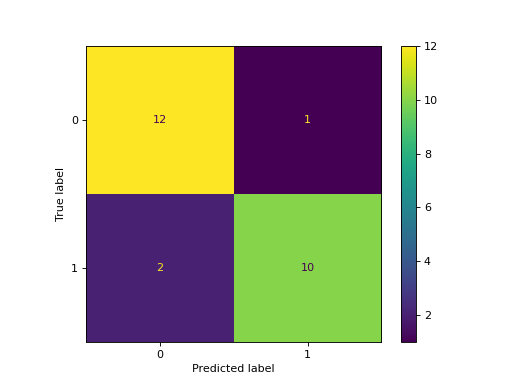

    >>> import matplotlib.pyplot as plt
    >>> from sklearn.datasets import make_classification
    >>> from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay
    >>> from sklearn.model_selection import train_test_split
    >>> from sklearn.svm import SVC
    >>> X, y = make_classification(random_state=0)
    >>> X_train, X_test, y_train, y_test = train_test_split(X, y,
    ...                                                     random_state=0)
    >>> clf = SVC(random_state=0)
    >>> clf.fit(X_train, y_train)
    SVC(random_state=0)
    >>> predictions = clf.predict(X_test)
    >>> cm = confusion_matrix(y_test, predictions, labels=clf.classes_)
    >>> disp = ConfusionMatrixDisplay(confusion_matrix=cm,
    ...                               display_labels=clf.classes_)
    >>> disp.plot()
    >>> plt.show()

Methods : from_estimator

Plot Confusion Matrix given an estimator and some data.

from_predictions

Plot Confusion Matrix given true and predicted labels.

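classmethod from_estimator(estimator, X, y, *, labels=None, sample_weight=None, normalize=None, display_labels=None, include_values=True, xticks_rotation='horizontal', values_format=None, cmap='viridis', ax=None, colorbar=True, im_kw=None, text_kw=None)

Plot Confusion Matrix given an estimator and some data.

Parameters : estimator estimator instance

Fitted classifier or a fitted Pipeline in which the last estimator is a classifier.

X {array-like, sparse matrix} of shape (n_samples, n_features)

Input values.

y array-like of shape (n_samples,)

Target values.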

labels array-like of shape (n_classes,), default=None

List of labels to index the confusion matrix. This may be used to reorder or select a subset of labels. If None is given, those that appear at least once in y_true or y_pred are used in sorted order.

sample_weight array-like of shape (n_samples,), default=None

Sample weights.

normalize {'true', 'pred', 'all'}, default=None

Whether to normalize the counts displayed in the matrix:

- if 'true', the confusion matrix is normalized over the true conditions (i.e. rows);

- if 'pred', the confusion matrix is normalized over the predicted conditions (i.e. columns);

- if 'all', the confusion matrix is normalized by the total number of samples;

- if None (default), the confusion matrix will not be normalized.

display_labels array-like of shape (n_classes,), default=None

Target names used for plotting. By default, labels will be used if it is defined, otherwise the unique labels of y_true and y_pred will be used.

include_values bool, default=True

Includes values in confusion matrix.

xticks_rotation {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

values_format str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

cmap str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

ax matplotlib Axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

colorbar bool, default=True

Whether or not to add a colorbar to the plot.

im_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

text_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

See also : ConfusionMatrixDisplay.from_predictions

Plot the confusion matrix given the true and predicted labels.

    >>> import matplotlib.pyplot as plt
    >>> from sklearn.datasets import make_classification
    >>> from sklearn.metrics import ConfusionMatrixDisplay
    >>> from sklearn.model_selection import train_test_split
    >>> from sklearn.svm import SVC
    >>> X, y = make_classification(random_state=0)
    >>> X_train, X_test, y_train, y_test = train_test_split(
    ...         X, y, random_state=0)
    >>> clf = SVC(random_state=0)
    >>> clf.fit(X_train, y_train)
    SVC(random_state=0)
    >>> ConfusionMatrixDisplay.from_estimator(
    ...     clf, X_test, y_test)
    >>> plt.show()

classmethod from_predictions(y_true, y_pred, *, labels=None, sample_weight=None, normalize=None, display_labels=None, include_values=True, xticks_rotation='horizontal', values_format=None, cmap='viridis', ax=None, colorbar=True, im_kw=None, text_kw=None)

Plot Confusion Matrix given true and predicted labels.

Parameters : y_true array-like of shape (n_samples,)

True labels.

y_pred array-like of shape (n_samples,)

The predicted labels given by the method predict of a classifier.

labels array-like of shape (n_classes,), default=None

List of labels to index the confusion matrix. This may be used to reorder or select a subset of labels. If None is given, those that appear at least once in y_true or y_pred are used in sorted order.

sample_weight array-like of shape (n_samples,), default=None

Sample weights.

normalize {'true', 'pred', 'all'}, default=None

Whether to normalize the counts displayed in the matrix:

- if 'true', the confusion matrix is normalized over the true conditions (i.e. rows);

- if 'pred', the confusion matrix is normalized over the predicted conditions (i.e. columns);

- if 'all', the confusion matrix is normalized by the total number of samples;

- if None (default), the confusion matrix will not be normalized.

display_labels array-like of shape (n_classes,), default=None

Target names used for plotting. By default, labels will be used if it is defined, otherwise the unique labels of y_true and y_pred will be used.

include_values bool, default=True

Includes values in confusion matrix.

xticks_rotation {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

values_format str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

cmap str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

ax matplotlib Axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

colorbar bool, default=True

Whether or not to add a colorbar to the plot.

im_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

text_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

See also : ConfusionMatrixDisplay.from_estimator

Plot the confusion matrix given an estimator, the data, and the label.

    >>> import matplotlib.pyplot as plt
    >>> from sklearn.datasets import make_classification
    >>> from sklearn.metrics import ConfusionMatrixDisplay
    >>> from sklearn.model_selection import train_test_split
    >>> from sklearn.svm import SVC
    >>> X, y = make_classification(random_state=0)
    >>> X_train, X_test, y_train, y_test = train_test_split(
    ...         X, y, random_state=0)
    >>> clf = SVC(random_state=0)
    >>> clf.fit(X_train, y_train)
    SVC(random_state=0)
    >>> y_pred = clf.predict(X_test)
    >>> ConfusionMatrixDisplay.from_predictions(
    ...    y_test, y_pred)
    >>> plt.show()

plot(*, include_values=True, cmap='viridis', xticks_rotation='horizontal', values_format=None, ax=None, colorbar=True, im_kw=None, text_kw=None)

Parameters : include_values bool, default=True

Includes values in confusion matrix.

cmap str or matplotlib Colormap, default='viridis'

Colormap recognized by matplotlib.

xticks_rotation {'vertical', 'horizontal'} or float, default='horizontal'

Rotation of xtick labels.

values_format str, default=None

Format specification for values in confusion matrix. If None, the format specification is 'd' or '.2g', whichever is shorter.

ax matplotlib axes, default=None

Axes object to plot on. If None, a new figure and axes are created.

colorbar bool, default=True

Whether or not to add a colorbar to the plot.

im_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.imshow call.

text_kw dict, default=None

Dict with keywords passed to matplotlib.pyplot.text call.

Returns a ConfusionMatrixDisplay instance that contains all the information to plot the confusion matrix.

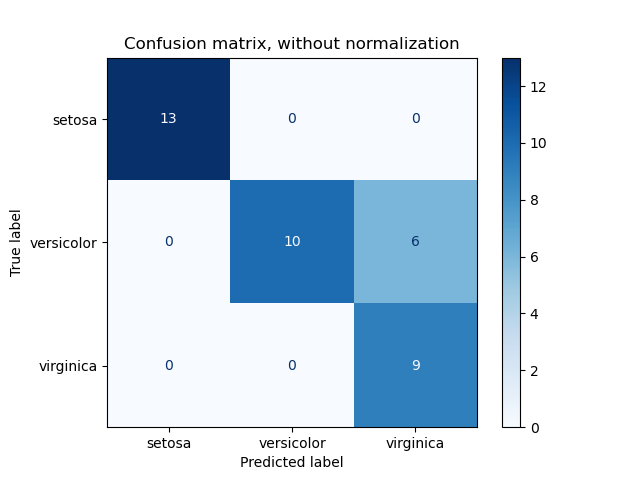

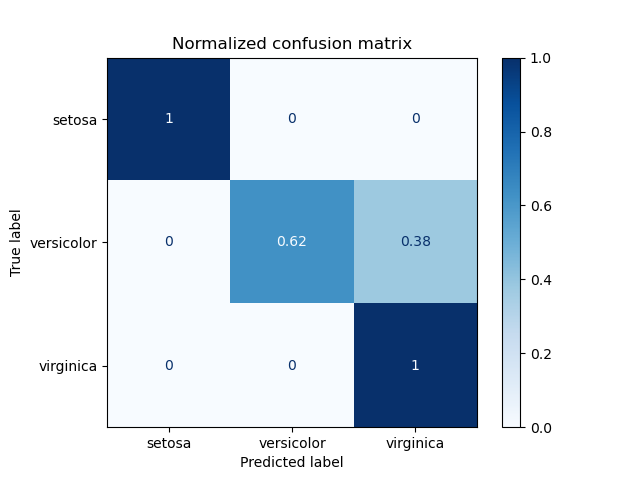

Confusion matrix

Example of confusion matrix usage to evaluate the quality of the output of a classifier on the iris data set. The diagonal elements represent the number of points for which the predicted label is equal to the true label, while off-diagonal elements are those that are mislabeled by the classifier. The higher the diagonal values of the confusion matrix the better, indicating many correct predictions.

The figures show the confusion matrix with and without normalization by class support size (number of elements in each class). This kind of normalization can be interesting in case of class imbalance to have a more visual interpretation of which class is being misclassified.

Here the results are not as good as they could be because our choice for the regularization parameter C was not the best. In real-life applications this parameter is usually chosen with the techniques described in Tuning the hyper-parameters of an estimator.
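One common way to pick C is a cross-validated grid search. The following is a minimal sketch, assuming a small illustrative grid of candidate values and 5-fold cross-validation; neither choice comes from this example, and the selected value depends on the data split.

```python
from sklearn import datasets, svm
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import GridSearchCV, train_test_split

iris = datasets.load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, random_state=0
)

# Illustrative candidate values for C (an assumption, not a recommendation).
param_grid = {"C": [0.01, 0.1, 1, 10]}
search = GridSearchCV(svm.SVC(kernel="linear"), param_grid, cv=5)
search.fit(X_train, y_train)

best_C = search.best_params_["C"]
print("Selected C:", best_C)

# Confusion matrix of the refitted best model on the held-out test set.
cm = confusion_matrix(y_test, search.predict(X_test))
print(cm)
```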

    Confusion matrix, without normalization
    [[13  0  0]
     [ 0 10  6]
     [ 0  0  9]]
    Normalized confusion matrix
    [[1.   0.   0.  ]
     [0.   0.62 0.38]
     [0.   0.   1.  ]]
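The normalized matrix printed above can be reproduced from the raw counts by dividing each row by its class support. A minimal sketch with NumPy, using the counts from the output above; the variable names are illustrative.

```python
import numpy as np

# Raw counts from the output above (rows: true class, columns: predicted).
cm = np.array([[13, 0, 0], [0, 10, 6], [0, 0, 9]])

# Class support = number of true samples per class = row sums.
support = cm.sum(axis=1, keepdims=True)

# Row-wise division gives, per true class, the share of each prediction.
cm_normalized = cm / support

np.set_printoptions(precision=2)
print(cm_normalized)
```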

    import matplotlib.pyplot as plt
    import numpy as np

    from sklearn import datasets, svm
    from sklearn.metrics import ConfusionMatrixDisplay
    from sklearn.model_selection import train_test_split

    # import some data to play with
    iris = datasets.load_iris()
    X = iris.data
    y = iris.target
    class_names = iris.target_names

    # Split the data into a training set and a test set
    X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

    # Run classifier, using a model that is too regularized (C too low) to see
    # the impact on the results
    classifier = svm.SVC(kernel="linear", C=0.01).fit(X_train, y_train)

    np.set_printoptions(precision=2)

    # Plot non-normalized confusion matrix
    titles_options = [
        ("Confusion matrix, without normalization", None),
        ("Normalized confusion matrix", "true"),
    ]
    for title, normalize in titles_options:
        disp = ConfusionMatrixDisplay.from_estimator(
            classifier,
            X_test,
            y_test,
            display_labels=class_names,
            cmap=plt.cm.Blues,
            normalize=normalize,
        )
        disp.ax_.set_title(title)

        print(title)
        print(disp.confusion_matrix)

    plt.show()
