Using Python to insert JSON into PostgreSQL [closed]


I have the following table:

```sql
create table json_table (
    p_id int primary key,
    first_name varchar(20),
    last_name varchar(20),
    p_attribute json,
    quote_content text
)
```

Now I want to load a JSON object with a Python script and have the script insert the JSON into the table. I have managed to insert JSON via psql, but it isn't really inserting a JSON file. It's more like inserting a string equivalent to the JSON file, which PostgreSQL then treats as JSON.

What I've done with psql to insert the JSON file, reading the file and loading its contents into a variable:

```sql
\set content `type C:\PATH\data.json`
insert into json_table select * from json_populate_recordset(NULL::json_table, :'content');
```

This works well, but I want my Python script to do the same. In the following code, the connection is already established:

```python
connection = psycopg2.connect(connection_string)
cursor = connection.cursor()
cursor.execute("set search_path to public")

with open('data.json') as file:
    data = json.load(file)

query_sql = """
insert into json_table select * from
json_populate_recordset(NULL::json_table, '{}');
""".format(data)

cursor.execute(query_sql)
```

```
Traceback (most recent call last):
  File "C:/PATH", line 24, in <module>
    main()
  File "C:/PATH", line 20, in main
    cursor.execute(query_sql)
psycopg2.errors.SyntaxError: syntax error at or near "p_id"
LINE 3: json_populate_recordset(NULL::json_table, '[
```

If I paste the JSON content into pgAdmin 4 and use the string inside json_populate_recordset(), it works. I assume I'm handling the JSON file wrong. My data.json looks like this:

```json
[
  {
    "p_id": 1,
    "first_name": "Jane",
    "last_name": "Doe",
    "p_attribute": {
      "age": "37",
      "hair_color": "blue",
      "profession": "example",
      "favourite_quote": "I am the classic example"
    },
    "quote_content": "'am':2 'classic':4 'example':5 'i':1 'the':3"
  },
  {
    "p_id": 2,
    "first_name": "Gordon",
    "last_name": "Ramsay",
    "p_attribute": {
      "age": "53",
      "hair_color": "blonde",
      "profession": "chef",
      "favourite_quote": "Where is the lamb sauce?!"
    },
    "quote_content": "'is':2 'lamb':4 'sauce':5 'the':3 'where':1"
  }
]
```

Insert JSON data into PostgreSQL DB using Python

Now that the table has been created and we have inspected the JSON data returned by the ObservePoint API, we are finally ready to load the data. First up are a couple of functions that open and close the database connection; the connection object they manage is then passed to the function that inserts the data. These functions are based on the ObservePoint API documentation, postgresqltutorial.com, and the standard psycopg2 documentation.

Opening and closing the connection:

```python
#!/usr/bin/python
# module name: queryFunc.py
import psycopg2


def openConn():
    conn = None
    try:
        # connect to the PostgreSQL server
        print('Connecting to the PostgreSQL database...')
        conn = psycopg2.connect(host="localhost", database="YOURDATABASE",
                                user="YOURUSERNAME", password="YOURPASSWORD")
        cur = conn.cursor()
        cur.execute('SELECT version()')
        print('CONNECTION OPEN: PostgreSQL database version:')
        # display the PostgreSQL database server version
        db_version = cur.fetchone()
        print(db_version)
        cur.close()
    except (Exception, psycopg2.DatabaseError) as error:
        print(error)
    # return the connection (None if connecting failed) so callers can use it
    return conn


def closeConn(conn):
    try:
        if conn is not None:
            conn.close()
            print('Database connection closed.')
    except (Exception, psycopg2.DatabaseError) as error:
        print(error)
```
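openConn() and closeConn() must be called as a pair, and the closeConn() call is skipped if anything between them raises. As a sketch of an alternative, the same pairing can be wrapped in a context manager so the connection is closed even when loading fails. open_connection() is a hypothetical helper, not part of the original module, and it assumes only a DB-API connect function. A separate wrapper is needed because in psycopg2, `with conn:` only ends the transaction and does not close the connection.

```python
from contextlib import contextmanager


@contextmanager
def open_connection(connect, **params):
    """Yield a DB-API connection and close it when the with-block exits.

    connect is any DB-API connect function (for example psycopg2.connect);
    params are passed straight through to it.
    """
    conn = connect(**params)
    try:
        yield conn
    finally:
        conn.close()  # runs even if the with-block raised
```

With the original settings, usage would look like `with open_connection(psycopg2.connect, host="localhost", database="YOURDATABASE", user="YOURUSERNAME", password="YOURPASSWORD") as conn: loadJourneys(conn)`.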

Loading the data: the function used to insert each row:

```python
# (also in queryFunc.py, which imports psycopg2)
def insertJourney(c, j):
    """Insert one journey row; c is an open connection, j the 15 column values."""
    sql = """
        INSERT INTO journeys (jname, lastcheck, queued, folderid, journeyoptions,
                              webjourneyrunning, journeyid, status, screenshot, createdat,
                              domainId, actions, nextcheck, userid, emails)
        VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)
    """
    try:
        # create a cursor; psycopg2 fills the %s placeholders from j
        cur = c.cursor()
        cur.execute(sql, j)
        c.commit()
        cur.close()
        # print('inserted')
    except (Exception, psycopg2.DatabaseError) as error:
        # roll back so the failed transaction does not block the next insert
        c.rollback()
        print(error)
```
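insertJourney() passes its values to cursor.execute() as parameters instead of formatting them into the SQL text, and the same approach fixes the syntax error from the question at the top of this page. There, str.format() inserts the Python repr of the loaded list, which is written with single quotes (`[{'p_id': 1, ...`), so the first quote closes the SQL string literal and PostgreSQL then fails at `p_id`. A minimal sketch, assuming psycopg2 and the json_table definition from the question; load_json_text() and insert_rows() are illustrative names, not taken from either source.

```python
import json

# psycopg2 fills in %s and quotes the text itself, so single quotes inside
# values such as "'am':2" cannot terminate the SQL literal early.
INSERT_SQL = """
insert into json_table
select * from json_populate_recordset(NULL::json_table, %s::json);
"""


def load_json_text(path):
    """Read a JSON file and return it as JSON text (not a Python repr)."""
    with open(path, encoding="utf-8") as file:
        data = json.load(file)  # fails early if the file is not valid JSON
    return json.dumps(data)     # double-quoted JSON, unlike str(data)


def insert_rows(connection, json_text):
    """Insert every object in json_text as one row of json_table."""
    with connection:  # psycopg2: commit on success, roll back on error
        with connection.cursor() as cursor:
            cursor.execute(INSERT_SQL, (json_text,))
```

With a psycopg2 connection opened as in the question, the call would be `insert_rows(connection, load_json_text('data.json'))`. Reading the file with `file.read()` and passing that string directly would also work; the json.load()/json.dumps() round trip just validates the file first.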

Loading the data: this function calls ObservePoint, parses the returned JSON, and inserts each journey with the insertJourney function. Column values that are not plain strings are converted in Python first (timestamps trimmed, dicts serialized to JSON) so PostgreSQL accepts the insert:

```python
import http.client
import json

import queryFunc  # module containing openConn(), closeConn() and insertJourney()


def loadJourneys(c):
    print(':loadJourneys() start')
    # HTTPS connection to the ObservePoint API (separate from the database connection c)
    api = http.client.HTTPSConnection("api.observepoint.com")
    payload = "{}"
    headers = {'authorization': "api_key YOURAPIKEY"}
    api.request("GET", "/v2/web-journeys", payload, headers)
    res = api.getresponse()
    data = res.read()
    api.close()
    de_data = data.decode("utf-8")
    jdata = json.loads(de_data)

    # ilist is the list of items to include, in the same order as the INSERT columns
    ilist = ['name', 'lastCheck', 'queued', 'folderId', 'options', 'webJourneyRunning',
             'id', 'status', 'screenshot', 'createdAt', 'domainId', 'actions',
             'nextCheck', 'userId', 'emails']
    # dtlist is for dates
    dtlist = ['lastCheck', 'createdAt', 'nextCheck']
    # dumplist is for dicts, stored as JSON strings
    dumplist = ['options']

    for rownum, drow in enumerate(jdata, 1):
        insertvalues = []
        # print('drow:' + str(rownum), end=' ')
        # walk ilist (not drow) so the values line up with the INSERT columns
        for dfield in ilist:
            value = drow.get(dfield)
            if dfield in dtlist and value is not None:
                # trim the last 10 characters of the timestamp string
                insertvalues.append(value[0:-10])
            elif dfield in dumplist:
                insertvalues.append(json.dumps(value))
            else:
                insertvalues.append(value)
        # print('insertvalues:', insertvalues)
        queryFunc.insertJourney(c, insertvalues)
```

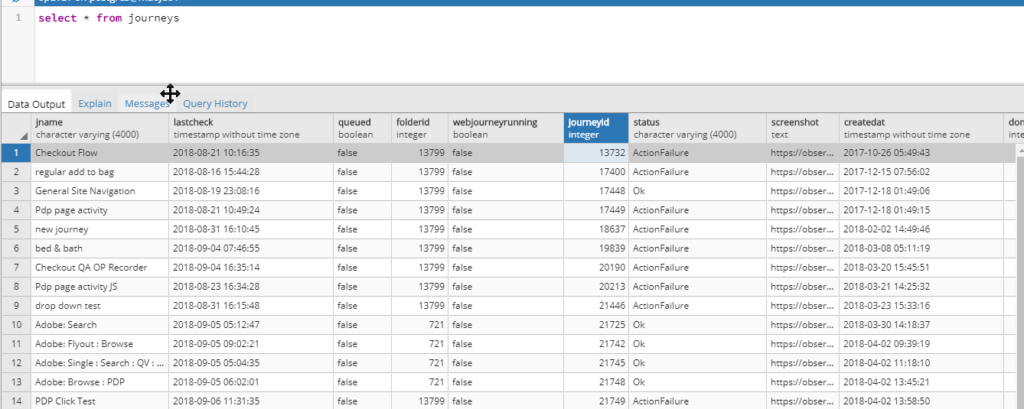

This is a sample of what it looks like in pgadmin:
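Because the per-row conversion in loadJourneys() does not depend on the API call or the database, it can be pulled out into a pure function and checked against a sample record. This is a sketch under that assumption: build_insert_values() and the sample record are hypothetical, and the `[:-10]` trim only mirrors the original code, which assumes the API's timestamps end in 10 characters that should be dropped.

```python
import json

# Column order of the journeys INSERT in insertJourney()
ILIST = ['name', 'lastCheck', 'queued', 'folderId', 'options',
         'webJourneyRunning', 'id', 'status', 'screenshot', 'createdAt',
         'domainId', 'actions', 'nextCheck', 'userId', 'emails']
DTLIST = {'lastCheck', 'createdAt', 'nextCheck'}  # timestamp strings to trim
DUMPLIST = {'options'}                            # dicts stored as JSON text


def build_insert_values(drow):
    """Return one journey's values in INSERT column order; missing keys give None."""
    values = []
    for field in ILIST:
        value = drow.get(field)
        if value is not None and field in DTLIST:
            value = value[:-10]        # same trimming as loadJourneys()
        elif value is not None and field in DUMPLIST:
            value = json.dumps(value)  # dict -> JSON string
        values.append(value)
    return values


# Hypothetical journey record; real ObservePoint responses may differ.
sample = {'id': 7, 'name': 'Home page',
          'lastCheck': '2020-01-15T10:30:00.000+00:00',
          'options': {'frequency': 'daily'}}
row = build_insert_values(sample)
print(row[0], row[1], row[4], row[6])
# Home page 2020-01-15T10:30:00 {"frequency": "daily"} 7
```

Keys missing from a record come back as None, which psycopg2 sends as SQL NULL, so every row still has exactly 15 values for the 15 placeholders.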

Psycopg2 – Insert dictionary as JSON

In this article, we are going to see how to insert a dictionary as JSON using Psycopg2 and Python.

Python dict objects can, and often should, be stored in database tables using a JSON data type, which most major SQL databases now provide. Storing them this way lets the front end or an API work with the key-value pairs directly when processing the requests it receives.

Setting up a PostgreSQL Database and Table:

Let us create a schema in PostgreSQL and add a table to it with at least one JSON-type column, using the following SQL script.

The script above creates a schema named “geeks” and, in it, a table “json_example” with two columns: “id”, the primary key, and “json_col”, of type JSON.

Inserting a Python dict object into a PostgreSQL table using the psycopg2 library:


After running the Python file above, open pgAdmin to view the output in the json_table table under the public schema by running the following SQL script in the Query Tool:

Explanation:

The code above is split into functions to show each step of the process clearly. The get_connection() function returns a connection object for the PostgreSQL database, which is used for all subsequent operations; if the connection cannot be established, it returns False.

Next, a Python dict is created in the variable dict_obj; this is the object to insert. Before inserting it, we need to convert it to JSON, since the database understands JSON, not Python dict objects. Python's built-in json module performs this conversion with its dumps() method, which returns a JSON string.

With the JSON string and the connection object in hand, we can insert the data into the database table json_table. For this purpose, the insert_value() function takes three arguments: id, the value for the id column; json_col, the value for the json_col column; and conn, the connection object created earlier. insert_value() runs an ordinary INSERT statement using the connection object, and the connection is closed once the data has been inserted.
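The steps described above can be sketched as follows. This is a minimal sketch, not the article's own code: it assumes psycopg2, a json_table table with columns id and json_col (the names used in the explanation), and placeholder connection settings, and the contents of dict_obj are hypothetical.

```python
import json

# Hypothetical dict to store; any JSON-serializable dict works the same way.
dict_obj = {"name": "Jane", "languages": ["python", "sql"], "active": True}


def get_connection():
    """Return a psycopg2 connection, or False if it cannot be established."""
    try:
        import psycopg2  # assumed installed; imported here so the sketch loads without it
        return psycopg2.connect(host="localhost", dbname="YOURDATABASE",
                                user="YOURUSERNAME", password="YOURPASSWORD",
                                connect_timeout=5)
    except Exception:
        return False


def insert_value(id, json_col, conn):
    """Insert one row into json_table; json_col must already be a JSON string."""
    with conn:  # psycopg2: commit on success, roll back on error
        with conn.cursor() as cur:
            # %s placeholders let psycopg2 quote the JSON text safely
            cur.execute("INSERT INTO json_table (id, json_col) VALUES (%s, %s)",
                        (id, json_col))


if __name__ == "__main__":
    conn = get_connection()
    if conn:
        insert_value(1, json.dumps(dict_obj), conn)  # dict -> JSON string
        conn.close()  # psycopg2's `with conn` does not close the connection
```

Closing the connection in the caller rather than inside insert_value() keeps the function reusable for several inserts over one connection.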

Using the psycopg2 Json adaptation

The following code demonstrates how psycopg2’s Json adaptation can be used instead of the standard json.dumps(). In order to pass a Python object to the database as a query argument, you can use the Json adapter imported from psycopg2.extras.