- Http POST Curl in python

- How to Use cURL in Python

- What is cURL?

- How to Use cURL in Python

- PycURL Library Installing

- Python cURL Examples

- GET request

- POST request

- PUT request

- DELETE request

- Downloading files

- Using Proxy in PycURL

- Conclusion and Takeaways

Http POST Curl in python

I’m having trouble understanding how to issue an HTTP POST request using curl from inside Python. I’m trying to post to the Facebook Open Graph. Here is the example they give, which I’d like to replicate exactly in Python.

    curl -F 'access_token=. ' \
         -F 'message=Hello, Arjun. I like this new API.' \
         https://graph.facebook.com/arjun/feed

You can use httplib to POST with Python, or the higher-level urllib2:

    # Python 2: urlencode and urlopen live directly in urllib
    import urllib

    params = {}
    params['access_token'] = '*****'
    params['message'] = 'Hello, Arjun. I like this new API.'
    params = urllib.urlencode(params)
    f = urllib.urlopen("https://graph.facebook.com/arjun/feed", params)
    print f.read()

There is also a Facebook-specific, higher-level library for Python that does all the POSTing for you.
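For readers on Python 3, the same request can be sketched with `urllib.request` and `urllib.parse`. This is a minimal sketch, not the answerer's code: the helper name `build_feed_post` is hypothetical, and it sends the fields as `application/x-www-form-urlencoded`, whereas `curl -F` sends `multipart/form-data`, so it assumes the server accepts either encoding.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_feed_post(access_token, message,
                    url="https://graph.facebook.com/arjun/feed"):
    """Build (but do not send) a POST request carrying the two form fields."""
    fields = {"access_token": access_token, "message": message}
    # In Python 3 the request body must be bytes, not str.
    data = urlencode(fields).encode("ascii")
    # Supplying data makes urllib issue a POST instead of a GET.
    return Request(url, data=data)
```

Passing the returned object to `urllib.request.urlopen()` would send it; the response body is then available through `.read()` as bytes.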

Why do you use curl in the first place?

Python has extensive libraries for Facebook, as well as built-in libraries for web requests, so calling another program and reading its output is unnecessary.

data may be a string specifying additional data to send to the server, or None if no such data is needed. Currently HTTP requests are the only ones that use data; the HTTP request will be a POST instead of a GET when the data parameter is provided. data should be a buffer in the standard application/x-www-form-urlencoded format. The urllib.urlencode() function takes a mapping or sequence of 2-tuples and returns a string in this format. urllib2 module sends HTTP/1.1 requests with Connection:close header included.
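The rule quoted above (a request becomes a POST as soon as data is supplied) can be checked without touching the network. The sketch below uses the Python 3 names `urllib.request.Request` and `urllib.parse.urlencode`; the field name and value are only illustrative.

```python
from urllib.parse import urlencode
from urllib.request import Request

target = "http://www.target.net/work"

# No data: urllib will issue a GET.
get_request = Request(target)

# With url-encoded data (bytes in Python 3): urllib will issue a POST.
body = urlencode({"message": "Hello world"}).encode("ascii")
post_request = Request(target, data=body)

print(get_request.get_method())   # GET
print(post_request.get_method())  # POST
```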

    import urllib2, urllib

    parameters = {}
    parameters['token'] = 'sdfsdb23424'
    parameters['message'] = 'Hello world'
    target = 'http://www.target.net/work'
    parameters = urllib.urlencode(parameters)
    handler = urllib2.urlopen(target, parameters)

    if handler.code < 400:
        print 'done'  # call your job
    else:
        print 'bad request or error'  # failed

How to Use cURL in Python

Almost everyone who uses the Internet relies on cURL every day without noticing it. Because it is flexible and free, cURL is used everywhere: from cars and TVs to routers and printers.

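What is cURL?

cURL is a command-line tool and library for transferring data over a network. It supports many protocols, including: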

- HTTP and HTTPS. Designed to transfer text data between a client and a server. The main difference is that HTTPS encrypts the transmitted data.

- FTP, FTPS, and SFTP. Designed to transfer files over a network. FTPS is FTP secured with SSL/TLS encryption. SFTP transfers files over SSH.

- IMAP and IMAPS (IMAP over SSL). Application-layer protocols for email access.

- POP3 and POP3S (POP3 over SSL). Protocols for receiving email messages.

- SMB. An application-layer network protocol for remote access to files, printers, and other network resources.

- SCP. A protocol for copying files between computers using encrypted SSH as transport.

- TELNET. A network protocol for remote access to a computer through a command interpreter.

- GOPHER. A network protocol for distributed search and transfer of documents.

- LDAP and LDAPS (LDAP over SSL). Protocols for accessing directory services, commonly used for authentication.

- SMTP and SMTPS (SMTP over SSL). Network protocols for email transfer.

cURL also supports HTTPS certificates, HTTP POST, FTP upload, proxies, cookies, and username/password authentication.

cURL is a cross-platform command-line utility, so it can be used on any major operating system. To check whether cURL is installed, open a command prompt and run curl -V:

    C:\Users\Admin>curl -V
    curl 7.79.1 (Windows) libcurl/7.79.1 Schannel
    Release-Date: 2021-09-22
    Protocols: dict file ftp ftps http https imap imaps pop3 pop3s smtp smtps telnet tftp
    Features: AsynchDNS HSTS IPv6 Kerberos Largefile NTLM SPNEGO SSL SSPI UnixSockets

For example, to get the HTML code of a page, one can run the command-line tool like this:

    C:\Users\Admin>curl example.com
    Example Domain
    This domain is for use in illustrative examples in documents. You may use this domain in literature without prior coordination or asking for permission.
    More information.

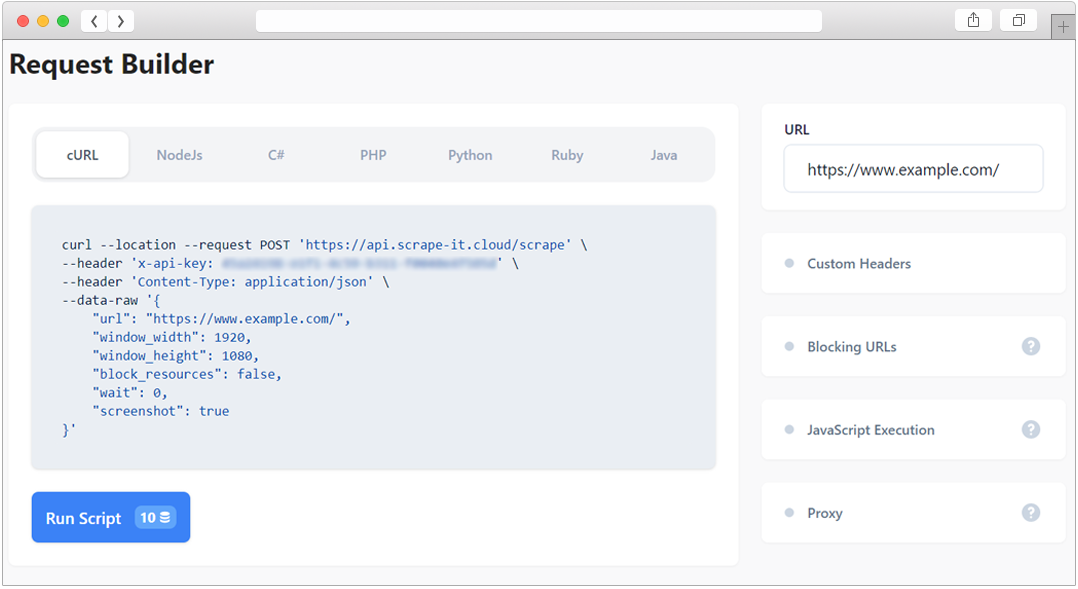
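The `Protocols:` line of the `curl -V` output shown earlier can also be read from a Python script, for instance to confirm that a given protocol is available before relying on it. The following is a small sketch; the function names are hypothetical, and the only assumption about the output format is the `Protocols:` prefix seen above.

```python
import shutil
import subprocess


def parse_protocols(version_output):
    """Return the protocol names listed on the 'Protocols:' line of `curl -V` output."""
    for line in version_output.splitlines():
        if line.startswith("Protocols:"):
            return line.split(":", 1)[1].split()
    return []


def installed_curl_protocols():
    """Run `curl -V` if curl is on PATH; return an empty list when it is not installed."""
    curl_path = shutil.which("curl")
    if curl_path is None:
        return []
    result = subprocess.run([curl_path, "-V"], capture_output=True, text=True, check=False)
    return parse_protocols(result.stdout)
```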

To write a web page scraper with cURL, one can use our API, which helps to scrape pages. Just fill in the required fields, and then use the result as needed: either run it from the site or paste the generated code into a program.

However, as a rule, the command-line tool alone is not enough, because the transfer has to happen inside a Python program. For this purpose, cURL’s functionality is exposed as a library, libcurl, and Python has a wrapper around libcurl called PycURL. In short, PycURL is cURL for Python.

How to Use cURL in Python

To begin with, it is worth saying that there are many services that can translate cURL commands into code. This option is suitable for those who already have experience in writing commands but who need program code.

However, it is worth noting that such services usually generate code for Requests, a popular third-party HTTP library for Python, rather than for cURL itself. Although this approach is somewhat limiting, it may suit some.

Another way is to use PycURL and write program code oneself.

PycURL Library Installing

As mentioned, PycURL is a thin wrapper around libcurl and inherits all of its features. For example, PycURL is fast (its documentation describes it as considerably faster than Requests, a pure-Python library for HTTP requests), supports multiple protocols, and exposes sockets for network operations. PycURL also supports the same protocols as cURL.

To install PycURL, open a command prompt and run the following command:

    pip install pycurl

If pip install fails, one can go to the official PycURL site, where the latest installation files are available.
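After installation, a quick check from Python can confirm that the module is importable. The sketch below is only illustrative (the function name `pycurl_version` is hypothetical); it relies on the module-level `pycurl.version` string and returns None when PycURL is missing.

```python
def pycurl_version():
    """Return PycURL's version string, or None when PycURL is not installed."""
    try:
        import pycurl
    except ImportError:
        return None
    # The string names PycURL and the libcurl build it is linked against.
    return pycurl.version


print(pycurl_version())
```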

Python cURL Examples

The most common operations are GET, POST, PUT, and DELETE requests.

GET request

The simplest PycURL example is fetching data with a GET request. Besides pycurl, this requires one more import: BytesIO (a stream backed by an in-memory bytes buffer).

    import pycurl
    from io import BytesIO

After that, declare the objects used:

    b_obj = BytesIO()
    crl = pycurl.Curl()
    crl.setopt(crl.URL, 'https://example.com/get')

Then start the transfer, read the data, and display it:

    # Write the response bytes into the BytesIO buffer
    crl.setopt(crl.WRITEDATA, b_obj)
    # Start transfer
    crl.perform()
    # End curl session
    crl.close()
    # Get the content stored in the BytesIO object (as bytes)
    get_body = b_obj.getvalue()
    # Decode the bytes as UTF-8 and print the result
    print('Output of GET request:\n%s' % get_body.decode('utf8'))

The response body is printed exactly as the server sends it (for an API endpoint, typically JSON). If the request fails at the HTTP level, the server’s status code indicates why. For example, status code 404 means the page wasn’t found.
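By default, `perform()` does not raise an exception for HTTP error statuses such as 404, so the status code has to be read explicitly with `getinfo()`. The sketch below shows one way to do that. The helper name `fetch` is hypothetical, and it expects an already-created `pycurl.Curl()` handle so that the caller decides when to close it.

```python
from io import BytesIO


def fetch(crl, url):
    """GET `url` on an existing pycurl.Curl handle; return (status_code, text)."""
    buffer = BytesIO()
    crl.setopt(crl.URL, url)
    crl.setopt(crl.WRITEDATA, buffer)
    crl.perform()
    # getinfo() must be called before the handle is closed.
    status = crl.getinfo(crl.RESPONSE_CODE)
    # Assumes a UTF-8 body; undecodable bytes are replaced rather than raising.
    return status, buffer.getvalue().decode("utf-8", errors="replace")
```

A typical call would create the handle with `crl = pycurl.Curl()`, run `status, text = fetch(crl, 'https://example.com/get')`, and finish with `crl.close()`.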

POST request

A POST request sends data to the server. Like GET, it is an HTTP request method. There are two different ways to send data with POST: sending text data and sending a file.

First, import PycURL and urlencode (for encoding the form data), and declare the objects used:

    import pycurl
    from urllib.parse import urlencode

    crl = pycurl.Curl()
    crl.setopt(crl.URL, 'https://example.com/post')

Then set the data to send in the request body. Setting POSTFIELDS also sets the HTTP request method to POST:

    post_data = {'field': 'value'}  # placeholder form fields
    postfields = urlencode(post_data)
    crl.setopt(crl.POSTFIELDS, postfields)

And the last step is to perform the POST:

    crl.perform()
    crl.close()

Sending data from a file on disk is similar:

    import pycurl

    crl = pycurl.Curl()
    crl.setopt(crl.URL, 'https://example.com/post')
    # Upload this script itself as the form field "fileupload"
    crl.setopt(crl.HTTPPOST, [('fileupload', (crl.FORM_FILE, __file__,)),])
    crl.perform()
    crl.close()

If the file data is in memory, one can use FORM_BUFFER/FORM_BUFFERPTR instead:

    crl.setopt(crl.HTTPPOST, [
        ('fileupload', (
            crl.FORM_BUFFER, 'readme.txt',
            crl.FORM_BUFFERPTR, 'This is a readme file',
        )),
    ])

The rest of the code stays the same.
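The two POST styles above can be collected into small helpers. This is a sketch under stated assumptions: the helper names are hypothetical, both expect an already-created `pycurl.Curl()` handle, and the multipart variant passes plain `(name, value)` string pairs to `HTTPPOST`, which is intended to correspond to repeated `curl -F 'name=value'` arguments such as those in the Facebook example at the top of this page.

```python
from urllib.parse import urlencode


def configure_urlencoded_post(crl, url, fields):
    """Prepare an application/x-www-form-urlencoded POST on a pycurl.Curl handle."""
    crl.setopt(crl.URL, url)
    # Setting POSTFIELDS also switches the request method to POST.
    crl.setopt(crl.POSTFIELDS, urlencode(fields))


def configure_multipart_post(crl, url, fields):
    """Prepare a multipart/form-data POST, similar to repeated `curl -F name=value`."""
    crl.setopt(crl.URL, url)
    crl.setopt(crl.HTTPPOST, [(name, value) for name, value in fields.items()])
```

With a real handle, either call would be followed by `crl.perform()` and `crl.close()`.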

PUT request

A PUT request is similar to a POST request. The difference is that PUT is typically used to upload a file in the body of the request, and it can both create and overwrite the resource at a given address. When using PUT with PycURL, it is important to remember that the file must stay open for the duration of the transfer.

This part is similar to the POST method:

    import pycurl

    clr = pycurl.Curl()
    clr.setopt(clr.URL, 'https://example.com/put')

Then enable upload mode and open the file to send:

    clr.setopt(clr.UPLOAD, 1)
    file = open('body.json', 'rb')  # binary mode, so bytes are sent unchanged
    clr.setopt(clr.READDATA, file)

After that, the transfer can be started:

    clr.perform()
    clr.close()

And only at the end can the file be closed:

    file.close()
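The requirement that the file stay open during the transfer maps naturally onto a `with` block. The following sketch is one way to arrange it; the helper name `put_file` is hypothetical, it expects an existing `pycurl.Curl()` handle, and setting `INFILESIZE` is an optional addition that lets libcurl announce the body size.

```python
import os


def put_file(crl, url, path):
    """Upload the file at `path` with HTTP PUT using an existing pycurl.Curl handle."""
    crl.setopt(crl.URL, url)
    crl.setopt(crl.UPLOAD, 1)
    # Telling libcurl the size up front lets it send a Content-Length header.
    crl.setopt(crl.INFILESIZE, os.path.getsize(path))
    # The file is open for the whole transfer and closed right after perform().
    with open(path, "rb") as body:
        crl.setopt(crl.READDATA, body)
        crl.perform()
```

Because the handle still refers to the closed file afterwards, `READDATA` would need to be set again before the handle is reused for another upload.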

DELETE request

And the last example is an HTTP DELETE request. It sends a request to delete the target resource to the server:

    import pycurl

    crl = pycurl.Curl()
    crl.setopt(crl.URL, "http://example.com/items/item34")
    crl.setopt(crl.CUSTOMREQUEST, "DELETE")
    crl.perform()
    crl.close()

Downloading files

Sometimes the received data needs to be written to a file. For this, one can use the same code as for transferring data from a file, with one exception: setopt is called with WRITEDATA instead of READDATA, and the file is opened for writing in binary mode:

    crl.setopt(crl.WRITEDATA, file)
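A complete download might look like the sketch below. The helper name `download` is hypothetical, and it again takes an existing `pycurl.Curl()` handle; the target file is opened in binary write mode and stays open until the transfer has finished.

```python
def download(crl, url, path):
    """Save the response body for `url` to `path` using an existing pycurl.Curl handle."""
    crl.setopt(crl.URL, url)
    # "wb": libcurl hands over raw bytes, so the file must be opened in binary mode.
    with open(path, "wb") as target:
        crl.setopt(crl.WRITEDATA, target)
        crl.perform()
```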

Using Proxy in PycURL

A cURL proxy is an option of the curl utility that allows one to send a request indirectly, through a proxy server. This makes it an indispensable tool for web scraping.

The proxy setting is relevant when parsing a large amount of data. A client that sends hundreds of requests per minute from a single IP address is likely to get blocked.

This happens because servers activate protection against DoS attacks. Spreading requests across different proxies addresses this problem and makes it possible to scrape data with a much lower risk of being blocked.
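Using different proxies usually means rotating through a list of them, one per request. The sketch below illustrates the idea with `itertools.cycle`. The proxy addresses are hypothetical placeholders from a documentation-only IP range, the helper name `use_next_proxy` is made up for this example, and the function expects an existing `pycurl.Curl()` handle.

```python
from itertools import cycle

# Hypothetical proxy addresses, for illustration only.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# cycle() repeats the list endlessly, so the rotation never runs out.
_rotation = cycle(PROXIES)


def use_next_proxy(crl):
    """Point the handle at the next proxy in the rotation and return its address."""
    proxy = next(_rotation)
    crl.setopt(crl.PROXY, proxy)
    return proxy
```

Calling `use_next_proxy(crl)` before each `crl.perform()` sends consecutive requests through different proxies.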

The example also uses the certifi library, a bundle of trusted CA certificates, which is installed with pip:

    pip install certifi

In many cases it is already present, because it is installed as a dependency of other packages:

    C:\Users\Admin>pip install certifi
    Requirement already satisfied: certifi in c:\users\admin\appdata\local\programs\python\python310\lib\site-packages (2022.5.18.1)

To check that it is available, import certifi in the interpreter:

    Python 3.10.5 (tags/v3.10.5:f377153, Jun 6 2022, 16:14:13) [MSC v.1929 64 bit (AMD64)] on win32
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import certifi
    >>> _

To use it in the program, first import all the libraries:

    import pycurl
    from io import BytesIO
    import certifi

Then pass the proxy address to each Curl handle through the PROXY option. The method below is a fragment of a class that keeps separate handles for GET, POST, and PUT requests:

    # logger is assumed to be a logging.Logger defined elsewhere
    def set_proxy(self, proxy):
        if proxy:
            logger.debug('PROXY SETTING PROXY %s', proxy)
            self.get_con.setopt(pycurl.PROXY, proxy)
            self.post_con.setopt(pycurl.PROXY, proxy)
            self.put_con.setopt(pycurl.PROXY, proxy)

Conclusion and Takeaways

cURL is a handy utility for making requests that supports most transfer protocols. The libcurl API was created so that the same functionality can be used in one’s own programs, and PycURL is a thin wrapper around libcurl for use in Python.

With the help of this library, it is possible to make all kinds of requests and work with all the protocols supported by cURL. At the same time, PycURL is generally faster than its pure-Python counterpart, the Requests library.

Valentina Skakun

I’m a technical writer who believes that data parsing can help in getting and analyzing data. I’ll explain what parsing is and how to use it.